The Geometry Nodes project has just finished its third 2-month cycle. This time the targets were an improved material pipeline, curves, volumes, and the design of a system to pass attributes more directly. To help wrap this up and prepare for Blender 3.0, part of the team gathered in Amsterdam for a nodes workshop from June 22 to 25.

The workshop was organized by Dalai Felinto and Jacques Lucke. They picked the topics to work on, updated their latest designs and, once ready, presented them for further debate to a wider audience (Andy Goralczyk, Brecht Van Lommel, Pablo Vazquez, Simon Thommes, Ton Roosendaal).

The topics covered were:

- Simulation Solvers

- Geometry Checkpoints

- Attribute Sockets

- Particle Nodes

- Physics Prototype

Simulation Solvers

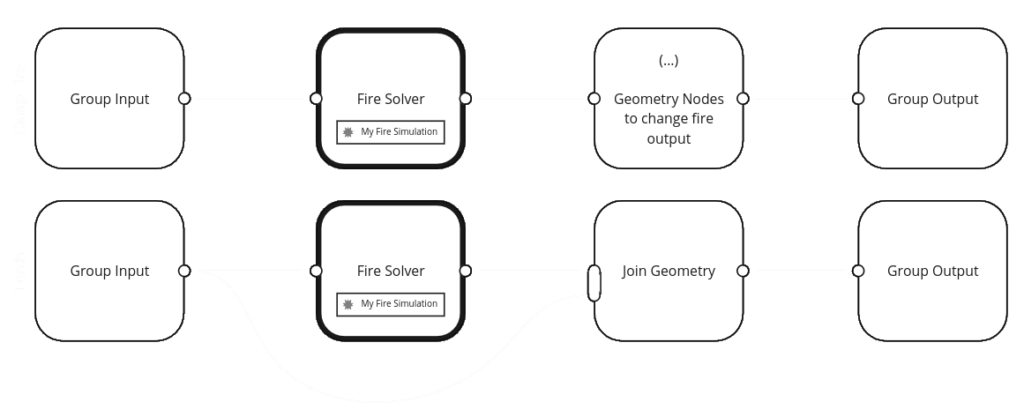

How to integrate simulation with geometry nodes?

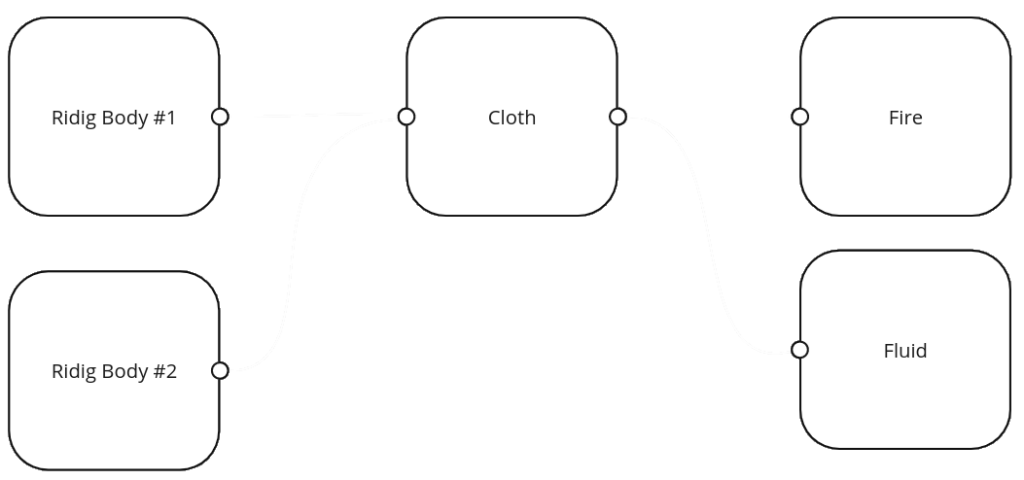

The simulation solvers will be integrated in the pipeline at the Geometry Nodes level. Solver nodes take geometry as input and produce geometry as output, so the result can be transformed by further nodes, or even connected to a different solver afterwards. While some solvers can work as a single node with one input and one output, other solvers will have multiple input and output sockets.

A solver node will point to a separate data-block. The same data-block can be used in different Geometry Nodes trees. This way, multiple geometries can be solved together, interacting with each other in the same solver (e.g., different pieces of cloth colliding with each other).
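To make the shared data-block idea concrete, here is a minimal Python sketch. It is not Blender's implementation or API: `SharedSolver`, `submit` and `collisions` are hypothetical names, and each cloth piece is reduced to a 1D interval so that "colliding" is just an overlap test. What it illustrates is that the solver sees every submitted piece at once, regardless of which node tree contributed it.

```python
from __future__ import annotations

from itertools import combinations


class SharedSolver:
    """Hypothetical solver data-block referenced by several node trees."""

    def __init__(self, name: str):
        self.name = name
        # (tree name, piece name) -> 1D bounds standing in for real geometry
        self.pieces: dict[tuple[str, str], tuple[float, float]] = {}

    def submit(self, tree: str, piece: str, bounds: tuple[float, float]) -> None:
        # Each node tree that points to this data-block contributes its geometry.
        self.pieces[(tree, piece)] = bounds

    def collisions(self) -> list[tuple[tuple[str, str], tuple[str, str]]]:
        # Every piece is tested against every other piece, whichever tree it came from.
        hits = []
        for (a, (a0, a1)), (b, (b0, b1)) in combinations(self.pieces.items(), 2):
            if a0 <= b1 and b0 <= a1:  # intervals overlap
                hits.append((a, b))
        return hits


cloth = SharedSolver("Cloth")
cloth.submit("TreeA", "shirt", (0.0, 2.0))
cloth.submit("TreeB", "scarf", (1.5, 3.0))
cloth.submit("TreeB", "flag", (5.0, 6.0))
print(cloth.collisions())
```

The shirt and the scarf come from different trees, yet they are tested against each other because both trees feed the same data-block.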

Colliders and force fields will still be defined at the object level. This will allow any object outside the Geometry Nodes system to influence multiple solvers.

Because the evaluation order of the solvers depends on how they are connected in the node trees, it is important to display an overview of their relations. At the View Layer level, users will be able to inspect (read-only) the dependencies between the different solver systems, including any circular dependencies. The order itself, though, is defined in the geometry node trees.
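The read-only overview boils down to ordering a dependency graph and reporting cycles. Below is a minimal sketch using Python's standard `graphlib` module (Python 3.9+). It assumes each solver is identified by a name and maps to the set of solvers whose output it reads; `solver_overview` and the solver names are hypothetical, not part of Blender.

```python
from __future__ import annotations

from graphlib import CycleError, TopologicalSorter


def solver_overview(depends_on: dict[str, set[str]]) -> tuple[list[str], list[str] | None]:
    """Return (evaluation order, None), or ([], cycle) if the solvers form a loop."""
    try:
        # static_order() yields each solver after all the solvers it depends on.
        return list(TopologicalSorter(depends_on).static_order()), None
    except CycleError as err:
        # graphlib stores the offending cycle as the second element of err.args;
        # its first and last entries are the same node.
        return [], list(err.args[1])


print(solver_overview({"cloth_b": {"cloth_a"}, "smoke": {"cloth_b"}}))
print(solver_overview({"hair": {"cloth"}, "cloth": {"hair"}}))
```

The first call yields an order in which `cloth_a` comes before `cloth_b`, which comes before `smoke`; the second reports the `hair`/`cloth` loop instead of an order.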

Geometry Checkpoints

How to mix nodes, edit mode, and more nodes?

Sometimes artists need to use procedural tools, manually tweak the work, and then have more procedural passes. For example, an initial point distribution can be created using Geometry Nodes, then frozen. At that point, the artist could go to edit mode, delete some points, move others around, and then use Geometry Nodes again to instance objects.

For this we introduce the concept of checkpoints, a place (node) in the node tree that can be frozen. The geometry created up to that point is baked and can be edited directly in edit mode. Any nodes leading to the checkpoint cannot be changed without invalidating the manual tweaks.
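The checkpoint behavior described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `Checkpoint`, `freeze` and `evaluate` are hypothetical names, the nodes leading to the checkpoint are stood in for by a single function of a `seed` setting, and invalidation is modeled as an error when that setting changes after freezing.

```python
from __future__ import annotations

import copy
from typing import Callable

Points = list[tuple[float, float, float]]


class Checkpoint:
    """Hypothetical checkpoint node: bakes the geometry produced by the nodes
    before it so it can be edited by hand, and remembers which upstream
    setting produced the bake."""

    def __init__(self, upstream: Callable[[int], Points]):
        self.upstream = upstream           # stands in for the nodes leading to the checkpoint
        self.baked: Points | None = None   # frozen (and possibly hand-edited) geometry
        self.baked_with: int | None = None

    def freeze(self, seed: int) -> None:
        # Store a private copy; edit-mode changes apply to this copy only.
        self.baked = copy.deepcopy(self.upstream(seed))
        self.baked_with = seed

    def evaluate(self, seed: int) -> Points:
        if self.baked is None:
            return self.upstream(seed)     # not frozen: fully procedural
        if seed != self.baked_with:
            # Changing the nodes before the checkpoint would discard the manual tweaks.
            raise RuntimeError("upstream changed: manual edits would be invalidated")
        return self.baked


def distribute(seed: int) -> Points:
    """Toy point distribution (hypothetical)."""
    return [(float(i), float(seed), 0.0) for i in range(3)]


checkpoint = Checkpoint(distribute)
checkpoint.freeze(7)
del checkpoint.baked[0]              # "edit mode": delete a point by hand
print(len(checkpoint.evaluate(7)))   # downstream nodes (e.g. instancing) see 2 points
```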

For simulation systems, like hair, this is also desirable: distribute hair, comb it, add more complexity via nodes, simulate it and add more nodes. Even if the simulated data cannot be edited, freezing the simulation allows for performance improvements. You can simulate one frame, freeze it, and still see how the rest of the tree behaves when animated, without having to re-evaluate the simulation for every frame.

A live simulation and a baked cache are interchangeable. A node that reads from a cache (VDB, Alembic, etc.) should behave the same way as a solver node. This overall concept was only briefly presented and still needs to be explored further and formally approved.
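One way to read "interchangeable" is that a live solver and a cache reader satisfy the same interface, so downstream nodes cannot tell them apart. The sketch below assumes a hypothetical `geometry_at(frame)` method and uses a toy falling-point solver; a real cache reader would load VDB or Alembic data instead of a Python dict.

```python
from __future__ import annotations

from typing import Protocol


class GeometrySource(Protocol):
    """What a downstream node needs: the geometry at a given frame."""

    def geometry_at(self, frame: int) -> list[float]: ...


class LiveSolver:
    """Toy live solver: point heights falling under gravity (explicit Euler)."""

    def __init__(self, heights: list[float], fps: float = 24.0, gravity: float = -9.81):
        self.heights = heights
        self.dt = 1.0 / fps
        self.gravity = gravity

    def geometry_at(self, frame: int) -> list[float]:
        # Re-simulates from frame 0 every time: the cost that a cache avoids.
        z = list(self.heights)
        v = [0.0] * len(z)
        for _ in range(frame):
            v = [vi + self.gravity * self.dt for vi in v]
            z = [zi + vi * self.dt for zi, vi in zip(z, v)]
        return z


class CacheReader:
    """Toy cache reader standing in for a VDB/Alembic reader node."""

    def __init__(self, frames: dict[int, list[float]]):
        self.frames = frames

    def geometry_at(self, frame: int) -> list[float]:
        return self.frames[frame]


def bake(source: GeometrySource, frames: range) -> CacheReader:
    return CacheReader({f: source.geometry_at(f) for f in frames})


def offset_node(source: GeometrySource, frame: int, offset: float) -> list[float]:
    """A downstream node that works the same whatever source it is given."""
    return [z + offset for z in source.geometry_at(frame)]


live = LiveSolver([10.0, 5.0])
cache = bake(live, range(25))
print(offset_node(live, 12, 1.0) == offset_node(cache, 12, 1.0))
```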

Attribute Sockets

How to pass attributes directly from one node to another?

At the moment each geometry node operates on the entire geometry. A node gets an entire geometry in, and outputs an entire geometry. There is no granular way to operate on attributes directly, detached from the entire geometry. This leads to an extremely linear node tree, which is hard to read.
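The difference between the two styles can be shown with a toy model in Python, assuming geometry is just a dict mapping attribute names to per-point values. `attribute_add` mimics the current whole-geometry style, where operands are referenced by attribute name; `add` is a hypothetical attribute-socket style node. None of these names are Blender API.

```python
from __future__ import annotations

# Toy geometry: attribute name -> one value per point.
Geometry = dict[str, list[float]]


def attribute_add(geo: Geometry, a: str, b: str, result: str) -> Geometry:
    """Geometry in, geometry out: operands and result are attribute names."""
    out = {name: list(values) for name, values in geo.items()}
    out[result] = [x + y for x, y in zip(geo[a], geo[b])]
    return out


def add(a: list[float], b: list[float]) -> list[float]:
    """Attribute-socket style (hypothetical): the values themselves flow between nodes."""
    return [x + y for x, y in zip(a, b)]


geo = {"x": [1.0, 2.0], "noise": [0.5, 0.5]}

# Whole-geometry chain: every step passes the full geometry along and needs
# a named intermediate attribute ("tmp") that stays on the geometry.
linear = attribute_add(attribute_add(geo, "x", "noise", "tmp"), "tmp", "noise", "result")

# Socket chain: intermediate values never touch the geometry.
direct = add(add(geo["x"], geo["noise"]), geo["noise"])
print(linear["result"], direct)
```

Both chains compute the same values, but only the first leaves a `tmp` attribute behind and forces every step into a single line of geometry nodes.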

The initial proposal to address this was called the attribute processor. This was discussed but it faced a few problems:

- No topology change is possible within the attribute processor.

- Having a different context inside a geometry node group adds a level of complexity.

- There is no way to pass attributes around different sets of geometries.

- The attribute processor would only work on existing geometry; it couldn’t be used to create new geometry.

As an alternative, the team tried to find ways to handle the attributes as data directly at the node group level. Besides this, there was a pending design issue to be addressed: data abstraction from inside and outside the node groups. In other words, how to make sure that when an asset is created and shared for reuse, all the data it requires is still available once it is used by another object.

This proved trickier than expected, and it has been discussed as a follow-up to the workshop. You can check the current proposals here:

Update: There is a design proposal being formalized at the moment to address those problems.

Particle Nodes

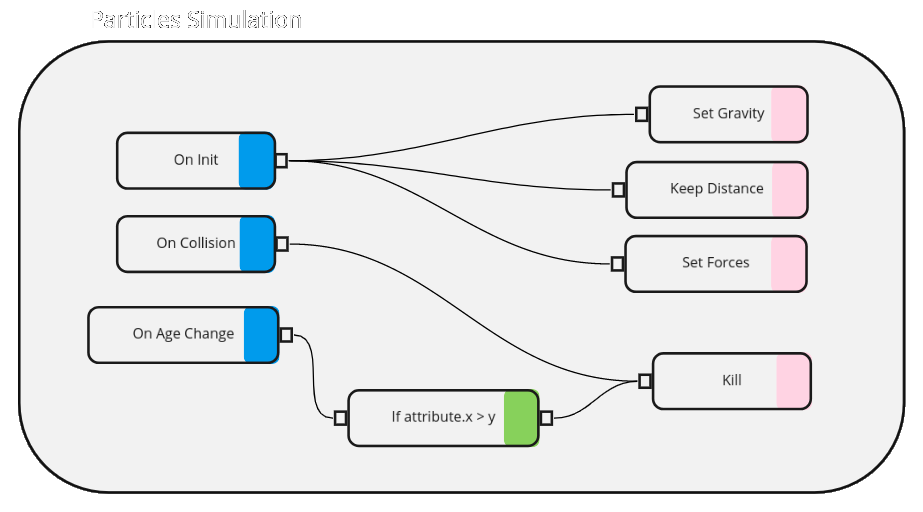

Many existing solvers can work as a black box that receives geometry and extra settings, and reads the current view layer’s colliders and force fields. The particle system, however, is instead a box full of surprises: nodes.

Nothing definitive was proposed, but the 2020 design was revisited to see whether it would be compatible with the proposed integration with Geometry Nodes.

- Particle simulations are solvers, but their behavior is defined by nodes.

- There are three different node types: Events, Condition Gates and Actions:

  - Events – On Init, On Collision, On Age

  - Condition Gates – If attribute > x, If colliding, And, Or, …

  - Actions – Set Gravity, Keep Distance, Set Forces, Kill Particles.

- Similar to Geometry Nodes, each Action node can:

  - Add or remove a row in the spreadsheet (i.e., create new points).

  - Add or remove a column in the spreadsheet (i.e., create a new attribute).

  - Edit a cell/row/column (i.e., set particle position).
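The event → condition gate → action flow above, operating on a spreadsheet of particles, can be sketched in Python. This is a toy model, not the 2020 design itself: particles are a dict of columns (one list per attribute, one row per particle), and `if_greater`, `and_gate`, `set_attribute`, `kill_particles`, `emit` and `step` are hypothetical names chosen for illustration. Only an "On Age" event is modeled.

```python
from __future__ import annotations

from typing import Callable

# "Spreadsheet" of particles: one column per attribute, one row per particle.
Particles = dict[str, list[float]]
# A condition gate narrows down the rows that an event passes on.
Gate = Callable[[Particles, list[int]], list[int]]
# An action edits the spreadsheet for the rows it receives.
Action = Callable[[Particles, list[int]], None]


def if_greater(name: str, x: float) -> Gate:
    """Condition gate: 'If attribute > x'."""
    return lambda p, rows: [i for i in rows if p[name][i] > x]


def and_gate(first: Gate, second: Gate) -> Gate:
    """Condition gate: 'And' (a row must pass both gates)."""
    return lambda p, rows: second(p, first(p, rows))


def set_attribute(name: str, value: float) -> Action:
    """Action that adds a column if it is missing, then edits cells."""
    def action(p: Particles, rows: list[int]) -> None:
        if name not in p:
            p[name] = [0.0] * len(p["age"])  # new column = new attribute
        for i in rows:
            p[name][i] = value
    return action


def kill_particles(p: Particles, rows: list[int]) -> None:
    """Action that removes rows (deletes particles)."""
    dead = set(rows)
    for name in p:
        p[name] = [v for i, v in enumerate(p[name]) if i not in dead]


def emit(count: int) -> Action:
    """Action that adds rows (new particles), zero-initialised."""
    def action(p: Particles, rows: list[int]) -> None:
        for name in p:
            p[name].extend([0.0] * count)
    return action


def step(p: Particles, dt: float, on_age: list[tuple[Gate, Action]]) -> None:
    """Age every particle, then fire the 'On Age' event through each gate/action pair."""
    p["age"] = [a + dt for a in p["age"]]
    for gate, action in on_age:
        rows = list(range(len(p["age"])))  # recomputed: earlier actions may add/remove rows
        action(p, gate(p, rows))


particles = {"age": [0.0, 0.9, 2.0]}
step(particles, 0.5, [(if_greater("age", 1.0), set_attribute("glow", 1.0))])
print(particles["glow"])  # old particles now glow
```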

Physics Prototype

As part of the Blender 3.0 architecture the simulation system will work independently from the animation playback. For this the physics clock needs to be detached from the animation clock.
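One common way to detach a physics clock from an animation clock is the fixed-timestep "accumulator" pattern used in game engines. It is swapped in here as an assumption, not as the design the team will prototype. In this Python sketch, `PhysicsClock` and `advance` are hypothetical names: the clock consumes elapsed real time in whole physics steps, so the simulation keeps running even while the animation playhead is paused.

```python
from __future__ import annotations

from typing import Callable


class PhysicsClock:
    """Hypothetical physics clock with its own time, advanced in fixed steps."""

    def __init__(self, step: float):
        self.step = step
        self.steps_taken = 0
        self._pending = 0.0  # elapsed time not yet consumed by a full step

    @property
    def time(self) -> float:
        return self.steps_taken * self.step

    def advance(self, elapsed: float, simulate: Callable[[float], None]) -> None:
        """Consume `elapsed` seconds of real time as whole physics steps."""
        self._pending += elapsed
        while self._pending >= self.step:
            simulate(self.step)
            self._pending -= self.step
            self.steps_taken += 1


animation_frame = 10           # playback is paused on this frame
calls: list[float] = []
clock = PhysicsClock(step=0.25)
clock.advance(1.0, calls.append)
print(len(calls), clock.time, animation_frame)
```

The animation frame never changes while the physics clock advances; tying `advance` to playback instead would reintroduce the coupling the prototype is meant to remove.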

To address this, we will build a prototype to test how this can work in both the UI and the back end. Its scope was not covered during the workshop.


Hello, I am not a Blender user, but I keep track of its progress. Is there any possibility that this node workflow can be used to create a 2D motion graphics editor inside Blender (equivalent to After Effects and Fusion in terms of results)? It would be very cool if Blender became a good mograph tool too in the future.

Solvers gonna be re-designed?

What kinds of solvers will there be? And what algorithms?

Pic, Flip, Apic, Nflip, Sflip, Asflip: (new technical paper available which looks promising for liquid and sand simulations)

And how about SPH, PBD, MPM or “hybrid” Lagrangian MPM?

Is the open-source “Project Chrono” simulation framework familiar, or a considered option? I guess the developers are familiar with all the new SIGGRAPH technical papers?

How about “Taichi Elements”? It is also an open-source framework for simulations, with GPU acceleration.

And NVIDIA physics? On GitHub, NVIDIAGameWorks has FleX, PhysX, and GVDB Voxels. Have these been considered?

At least NVIDIA’s web page says “GVDB Voxels is released as an open source library with multiple samples to demonstrate a range of capabilities.”

Or other open simulation frameworks like Fluid Engine Dev (Jet); it’s on GitHub.

And have you thought about getting more developers for simulations? For example, on GitHub there is michal1000w, whose real-time GPUSmoke looks very promising, and the developer is talking about this GPU simulator on Blender’s developer forum; it can be found on devtalk.blender.org as “Realtime GPU smoke simulation”.

And how about interaction between different solvers, with “one-way” and “two-way” coupling methods? Will it be designed to allow interaction between all possible physics solvers?

And Blender has this volume object: could that be simulated, animated, and edited in a voxel editor like particles/hair? (I like to use a volume empty with Mesh to Volume, especially for procedural modeling with the Displace modifier when a model must have complex volume shapes or 3D tunnels, etc. So maybe a simulation modifier would also be a lovely feature.)

But what types of simulations is the Blender team considering?

Crowd simulations, flocking, car traffic? Granular/sand/snow/fire/smoke/fluid/liquid (multiple liquids and gases interacting, with different colors and textures and the possibility to mix colors/shaders/textures, and UV/texture and vertex map advection?), procedural recursive shattering/fracturing with debris and dust? Floating objects (in gas, liquid, or crowds, or like leaves that drop from a tree, go with the wind, drop into the water and flow unless they are too heavy: so will there be a density that defines whether an object sinks or floats? The same in liquids: oil floating over water because of the density difference, with the possibility to “burn” that oil simulation while it floats over water.) Cascade simulations, retiming simulations (a retime node?), resizing/resampling/refining (a resample node?), color absorption, waves/ocean, wet maps/burn maps/heat maps (some dynamic paint node?), deformation motion blur support for third-party render engines? Interaction between hair/fur/feather simulations, cloth, and rigid/soft bodies? (How about muscles/skin/fat and skeletons, and the possibility to use these in crowd/populated scenes? How about variations/random texturing and procedural crowd generation: the possibility to generate multiple different-looking characters based on nodes, with muscles and clothes, and then simulate a crowd with actions such as attack, run, stop, escape, repel/beat off, jump, walk, look at, climb, fly, sink, dive, die/kill/dead, alive, dodge?) And how about multithreading? (I guess that will be important.) Then how about GPU acceleration?

Sorry for the big list; I am just wondering whether these different kinds of scenarios have been thought about. Or: rain (water, snow, ash?), a forest with leaves that drop and fly in the air while the trees are on fire. A fireplace/campfire with fire, smoke and sparks? Or multiple fluids, like two toothpastes with different colors, interacting acrylic paints, or two gases with different colors. Burning paper. Tearing cloth? Tearing hair/ropes? Ropes that carry something and, when they tear, drop what they were carrying (a rigid body, for example).

How about Stress/Tension/Velocity/Speed (+ wet/impact/heat etc.) maps? Stress and tension maps are very useful for wrinkles, and velocity (vertex-based velocity) could be used to emit particles when things are accelerating (even animated things), as could collision maps and wet maps, or even tension and stress maps. So I hope there will be these in nodes too. Also ambient occlusion (or some kind of distance map). Or maybe these could just be Stress/Tension/Velocity/Speed/Acceleration/Wet/Dry/Impact nodes that could be connected to map generation (with an optional image sequence or vertex/weight map output). Maybe some kind of accumulation for the remesher (like the remesher modifier in BoneStudio’s Blender build has) would be lovely, but with a falloff/maximum time (how long the accumulation lasts).

I would also like to see a little love for dyntopo, like ADF (adaptively sampled distance fields), and, when using dyntopo, an option to melt/merge/connect vertices (toggled on and off). For example, I love to use the Snake Hook tool, but it doesn’t merge/connect vertices unless I turn dyntopo off and use remesh, and the remesher might connect things that I don’t want merged/“melted”. A dedicated merge/melt/connect/join (vertex/triangle merge/collapse) sculpting brush that merges triangles that are close enough would also be lovely.

Then there are the missing wiggle/jiggle/spring bones (there are many good add-ons, but they are all getting old, so native integration would be nice when “everything nodes” happens).

Then, what is Cycles missing? Caustics (though these are going to be improved now with Cycles X?). How about support for a CCTV kind of shader? It was in LW: a shader that rendered the image seen by a camera, so it could create “video feedback” kinds of effects, or use a second camera’s view to generate an image/texture for a shader while rendering just once, from a specific angle with a selected camera.

I am also thinking about playing cards, like poker: Blender should have some kind of random/index iteration for shaders to make it easier to give multiple playing cards different image maps. At the moment it takes a lot of time to add a different texture to each of multiple playing cards, while in other software it is possible to select one folder and use all the pictures in that folder for different objects (by index/hierarchy or randomized). So something similar is needed for Cycles. There was an OSL shader trick for this, but it didn’t work in the newest Blender, so I guess OSL isn’t working perfectly after all?

And how about some kind of “memory” and/or “dynamic paint” node for geometry nodes? I started thinking about this because of tricks in geometry nodes like the ones here:

https://cgcookie.com/articles/all-the-new-features-and-changes-in-blender-2-93

This one: https://s3.amazonaws.com/cgcookie-rails/uploads%2F1621899296321-geo-nodes-snow.gif – with a “dynamic paint”/memory node (with dissolve/dry time, smudge, spread, drip, and shrink features), it would be pretty nice to generate new tools. This also reminds me that there could be an accumulate/accumulation node, which would work like dynamic paint but in 3D space (like the memory falloff in the Animation Nodes extension made by BlueFox Creation). And triggers to activate and stop physics, to create, for example, a character that fractures while it is walking (turning into pieces while still walking: a kind of deformed geometry with dynamic fracturing).

Sorry for my long and boring text. I have seen that Blender developers do great work, and they have lots of innovations/ideas which are much better than my thoughts. Keep making Blender greater; you make great things <3 Blender is awesome, just like all Blender developers <3

Has the API that Cycles X will use for AMD been determined yet?

@Dalai Felinto

“Any VFX industry veterans are welcome to join the Blender project and get involved in the ongoing topics.”

Tell me how I can help! :-)

https://www.imdb.com/name/nm0727947/?ref_=fn_al_nm_1

I’m not a Blender developer but my question is:

What’s your take on the development of geometry nodes? What is it doing right? What other apps can we take inspiration from? Should it borrow more from Houdini in one sense or another, etc.?

Hey Bob. I’m a fan of your videos ;) What you do is already super helpful. As you mentioned in one of them, they reach the developers (some of them, anyway), and they are a very solid base of feedback.

I was already planning to reach out to you, actually. The modeling team has other artists involved, but there should be room for more people. There is always a lot to test and give feedback on, …

If you think that may be interesting, drop by blender.chat and reach out to the modeling team (https://blender.chat/channel/modeling-module). Check when their next module meeting is, get a feeling for the module’s current agenda, what you can help test, what they need feedback on, which new features need nice demo files, those things.

Let’s rock n’roll!

Dalai’s comment proves what many are criticizing:

The main source of knowledge about workflow for Blender staff: youtubers.

Good thing Blender does no politics.

At least Bob is a true pro that is not enclosed in the Blender Bubble.

THAT’S the kind of profile Blender needs to get feedback from.

I think you’re doing a great job. Thanks!

However, I would like to ask, from a user’s and training maker’s point of view, how much will be lost with the future direction of Geometry Nodes. I’m afraid of creating training if the workflow can or will change in the near future. Can you give a clear statement about how current knowledge will translate to Geometry Nodes 2.0?

Hi Dimitri,

I have just elaborated this in a more comprehensive post here:

https://devtalk.blender.org/t/files-workflows-and-the-impact-of-geometry-nodes-design-changes/19632

“Any current knowledge is directly applicable to the new attribute changes. But the specific ways of achieving the same effects will improve.”

Hello Blender Devs, most of the things in the blog post sound amazing, but I think rewriting the core of physics in Blender would solve a lot of Blender’s ongoing problems, limitations and performance issues.

And, in my opinion, having colliders and, most importantly, force fields at the object level is good, but having them in the geometry node graph and simulation node graph is going to be way more flexible and useful, or at least having the option to put them there. Not only will it give more flexibility and a procedural nature, it will also be an improvement over the current system: if you have 4-5 force fields, it gets overwhelming and complicated to control them all with different settings, and that often leads to glitches and technical difficulties. Having them in the node group would make them not only easier to control but also easier to troubleshoot.

Also, in my opinion, instead of rushing to finish everything by 3.0, take your time, guys, and nail it. Taking feedback and design and functionality ideas from people who spend 8 hours a day making complicated FX shots at Weta or ILM would be way, way, way more valuable than from random hobbyists. Good luck guys.

I have a proposal for geometry nodes on RCS that I would love the Blender developers to look at.

Proposal located here: https://blender.community/c/rightclickselect/1Wxm/

Deals with the concept of having ‘lists’ of data values and how to loop over those.

Thanks for your proposal. By the way, my latest proposal about attribute sockets is: https://devtalk.blender.org/t/files-workflows-and-the-impact-of-geometry-nodes-design-changes/19632

What about the actual solvers themselves? Relying only on the age-old and slow Bullet along with FLIP can only get us so far before we hit performance issues and limited functionality (not to mention their half-baked, “pun intended”, implementation…): messed-up soft bodies, a buggy replay mode, no fluid-rigid two-way coupling, no proper fracturing workflow (relying on a relic add-on or a relic separate branch), etc…

If the goal of V3.0 is to set Blender up for the next 10+ years (Cycles X, Geo nodes, asset browser, etc…), then physics should be treated as a first-class citizen like any other area and get more up-to-date techniques that can fix all the aforementioned problems. One example that comes to mind is “PBD” (and, by extension, XPBD):

– It can do two way couplings.

– Leverages GPUs/Multi-threaded CPUs.

– Available as open source library under the MIT license.

I completely agree with rewriting the physics system in Blender and treating physics as one of Blender’s top priorities. Pretty much every part of Blender is comparable to, and as good as if not better than, other software, except physics. I’m not saying other software has really good built-in physics tools, but there are plugins for particles, rigid bodies, smoke and fluids, while for Blender there are barely any physics add-ons, and to get realistic fractures you have to use an old branch of Blender. I think physics has been pretty much ignored in Blender for a really long time, and it’s finally time it got some serious attention.

I am very happy about simulations getting an overhaul. They are probably the weakest area of Blender at the moment.

Blender’s simulators are considered backward; C4D simulation is an antique of the past.

This is amazing news!😀😀

KEEP EM COMING! you guys rock!!

What you guys did in the A Cor da Cidade was so great. And knowing that Geometry Nodes had a small part on this brings me a lot of joy.

Paraphrasing the old saying: If a feature is released in the forest and no one makes art with it, was it ever released?

So, thanks for your team for using it. You rock!

I haven’t been following this project while it was in its early stage, but I recently started learning the basics since it seems to have developed greatly.

So please bear with me if this is a complete noob question and probably asked before.

How will the modifier stack and nodes interact in the final product? Will geometry nodes replace modifiers completely, while you can still add a modifier that is a node group under the hood, or something else?

Most of the modifiers will have a node equivalent (made from a few nodes if needed).

However, the modifier stack can (and may) still exist for high-level stacking of effects, such as deciding whether you want rigging to happen before or after geometry nodes (or even both). Besides that, the modifier stack is also the interface for setting the node inputs.

Why don’t you do these design workshop with FX senior leads and sups from high-end studios instead?

That would give you the best ideas and avoid some bad designs that result from being made by people who never did FX jobs.

Totally agree. Decisions and plans are made by people who never left the Blender bubble. They will get there too eventually, sure, but not without making tons of mistakes and bad decisions that cost time and money, and unfortunately having to learn the hard way.

I have the same impression. Don’t get me wrong, I can’t really express how excited I am about development news, and I think great people are currently working on the new designs, but I hope more experts in the field can be allowed to give advice and speed up the process, avoiding unnecessary mistakes and saving money as well. From my point of view, just having used other node-based FX software, it sometimes seems like the Blender devs are reinventing the wheel. That being said, I think they are still doing great work; I am just concerned that it could be done more efficiently. Of course, I don’t know how hard it can be to get in touch with experts, especially during pandemic times. Thanks a lot for the hard work you are doing. Keep it up, Blender devs, you rock!

Hi Skylar, we normally do. This time we had to settle for the artists at the Blender Studio.

Travelling restrictions still apply all over Europe. And vaccination is still ongoing around the globe. So for this workshop I was happy enough to see the Netherlands quarantine restrictions to travelers from Germany being lifted.

Hi Dalai,

You know you can do Zoom/Teams/Google/Skype meetings to meet the people who would provide the best vision for this project. There is not necessarily any need to travel by plane. From a COVID/2021 point of view, it’s a bit of a fallacious argument.

I think what you should do is contact the studios (MPC/ILM/WETA/PIXAR…) that hire the people who are LEGITIMATE to give their feedback and vision on this. Give a voice to the people who are spending 8 hours a day doing FX on complex scenes, who know what creates struggle and what does not, what works well and what does not, what is smart and easy, what is too complicated for no reason, what is easy to extend and improve, and what is not…

Instead, you take the key decisions with 5 people, none of them with even a junior level in FX shot creation. That’s not good at all. And this is valid for any part of the software design.

That sounds like a perfect idea to me.

Hi. Zoom/Hangouts/… was not an option for this workshop. The main focus was to bring Ton’s design view closer to the team developing the system. Ton has some hearing issues that make any collaboration relying only on video or audio unviable.

The design process is intense and I wouldn’t want to do it remotely anyways.

Luckily, we are surrounded by artists here (Blender Institute + Blender Studio) who have used Blender for VFX for years. It is not luck, actually, but the core reason we work in the same building and why we have developers involved in strategic targets relocate to Amsterdam.

Any VFX industry veterans are welcome to join the Blender project and get involved in the ongoing topics. This is why we work in the public, post the work in progress design for feedback, have open module meetings, …

Was there any effort to collect input from VFX professionals outside of Blender prior to that meeting with Ton? You could have had a meeting with people from different studios and presented your findings to Ton during this in-person workshop. I’m sure there’s a wealth of wisdom out there that we could all benefit from, and that could easily be gathered if only requested.

Your geo nodes 2.0 proposal has a lot of similarities with how Maya’s node paradigm works. Was your second proposal inspired by that, or is it just a coincidence? How many other node-based programs were looked at before development started on geo nodes?

And if it is too much of a struggle to contact and find the people who are good, create an invitation section on your main website, with a page where motivated people can provide their info and LinkedIn profile, so you can select the most professional and skilled FX artists, set up virtual meetings with them and your team, and discuss the design all together. Open source is not only about sharing code; it is also about sharing decisions.

Not a bad Idea at all!

There are many threads on devtalk. Please check them. The developers are actively communicating with users.

https://devtalk.blender.org/t/fields-and-anonymous-attributes-proposal/19450

https://devtalk.blender.org/t/attributes-sockets-and-geometry-nodes-2-0-proposal/19459

The problem with devtalk is that the users are neither sorted nor identified.

As a result, the forums are polluted by non-skilled people with a lot of spare time and too much self-esteem.

And logically, the best people, who are working hard during this time and are too tired in the evenings to talk Blender, are not very well represented, and are often ignored because the subjects they raise are less “fun” or easy to reply to.

An ID and LinkedIn submission page for being heard by the devs would prune all the impostors and give a voice only to people who deserve to be heard.

I’m aware of the topics, and I follow them constantly.

I think devtalk is great, and the devs are doing a great job; overall it seems to be going in a good direction to me. But what Skylar is saying has some truth IMHO, maybe a bit extreme, but I do agree that most of the very experienced people in the FX field probably don’t really have the time to answer on devtalk.

There are still brilliant people contributing, like Erindale, who is very active, for example, or Daniel Bystedt, who is a great artist and professional and is actively contributing invaluable feedback. I don’t know if LinkedIn is a good alternative, though.

I hope the devs take these posts as constructive feedback. I would like to contribute more myself, and I’ll try if I can!

I meant that Blender.org could have a great page:

“Submit your profile to integrate Blender Design Teams”

where people would give their ID, job title, CV, and preferably their LinkedIn page, so that the Blender team can choose only the most valuable and professional people.

Then they would set up virtual meetings with them to discuss the best way to do things, and share years of experience all together.

This would lead to a way better Blender design, and gather a lot of great ideas to the devs, who could focus more time on doing good code and less time on guessing what would work.

Will there be different node editors for these simulation nodes?

Yes, it should be its own editor, with its own set of nodes.

I understand Blender plans to make “everything nodes” but around how many different total editors are planned? I’m aware of some of them but is there a comprehensive list?

Geo nodes

Simulation nodes (Fire, water, rigid, soft body?)

Particle (hair?) nodes

Sculpt nodes

UV nodes

Texture paint nodes

Animation nodes

Rigging nodes

Render nodes

Grease Pencil nodes

I don’t understand how the team will be able to completely rework the attribute inputs of every existing node just in time for the 3.0 release. It seems like an impossible task (assuming that 3.0 will not leave us with fewer features than the current 3.0 alpha offers?)

The development process/planning looks very confusing; I was hoping to get clarification about this process in this blog post.

I would like to know more about this too

The main reason 3.0 was postponed, is to allow the team the time to get the right design in place, as a foundation of what is to come for the following 2 years (and the years beyond that).

The current design will require changes to most of the existing nodes. But it is something we can handle with the existing team of core developers and volunteers (geometry nodes alone has quite a few developers involved).

> The development process/planning looks very confusing; I was hoping to get clarification about this process in this blog post.

Which part looks confusing? Are you talking about geometry nodes or Blender in general?

Well, what is very confusing is how the conversion will occur. How long will it take?

Will the team be able to convert every currently existing node just in time for 3.0? It seems that you are very confident about this and confirmed it just above?

Or maybe we won’t have time to convert everything, and some nodes will be removed and left for later, implying a downgrade of some functionality?

Or perhaps it will be done gradually, and we will have both old and new system co-existing temporarily?

What about backward compatibility? Will the node trees we are currently working on still work? Should all creators stop their work right now if they want their node groups supported in future releases?

Why are some developers still working on implementing new nodes? Shouldn’t they wait until this new system is implemented?

This conversion is quite a large task and implies a lot of changes. For now, the information received about it is a bit vague; perhaps the organisation will be done really soon? Less than 4 months to do all this is quite a big rush.

Yaas finally we might get some usable simulations in Blender!

As a (former?) user myself, I always thought that the simulations in Blender lack “directability”. That is different from having a knob to tweak every possible parameter of the simulation. What I always wanted was a way to direct the effects (as a film or art director), and to have the tools to facilitate that.

I hope that by building more interactive tools (i.e., decoupling physics from animation playback) and a simpler design we can achieve that.

I think the biggest problem with doing simulation in Blender is viewport performance (except maybe for rigid bodies, because they are very basic). Mantaflow can actually give really good results, but the user pretty much has to know what each setting will do before baking at high resolution, because it’s super painful to work with any high-res sim in the viewport. The physics part of Blender is the least impressive and useful part of the software, and performance is partly to blame: with the current viewport performance, someone needs the patience of a god to push Mantaflow to its limits and deal with constant crashes and lag. I think only when Blender gets stability, performance and RAM optimizations at the core level will simulations in Blender shine and more people start using them for actual production work. The quick effects are cool, but they are really basic and there aren’t many of them. Something like a quick sea, quick explosion, quick shockwave or quick destruction would be much better. Currently, doing any realistic fluid simulation is a massive pain, because after baking the sim it’s impossible to do anything; then the user has to instance spheres for foam, bubbles and spray, and Blender starts to choke. It’s a very bad experience. I think the node-based system could solve that with a much easier and more procedural way of adding those, and also with something like a proxy particle system for the viewport, to correctly represent simulations with a high number of particles. Thanks.

Omg, this is finally happening. I’ve been waiting for this for so long. Words cannot describe my excitement. Everything sounds like music to my ears. All the best of luck to the GOD developers.

That’s very good to hear, thanks for your kind words :)
