EEVEE has been evolving constantly since its introduction in Blender 2.80. The goal has been to make it viable both for asset creation and final rendering, and to support a wide range of workflows. Recent hardware innovations have made many new techniques viable, and EEVEE can take advantage of them.

A new beginning

For the Blender 3.x series, EEVEE’s core architecture will be overhauled to provide a solid base for the many new features to come. The following are the main motivations for this restructuring.

Render Passes and Real-Time Compositor

A core motivation for the planned changes to EEVEE’s architecture is the ability to output all render passes efficiently. The architecture is centered around a decoupling of lighting passes, but will still be fully optimized for more general use cases. Efficient output of render passes is a must in order to use the planned real-time viewport compositor to its full potential. This new paradigm will also speed up rendering when many passes are enabled, and all render passes will be supported, including AOVs.
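As a rough illustration of why decoupled lighting passes are convenient for a compositor, the sketch below keeps each light component of a pixel in its own buffer and rebuilds the combined image from them. The pass names (`diffuse_light`, `diffuse_color`, `specular_light`, `specular_color`, `emission`) and the "light times color, plus emission" recombination rule are assumptions modeled loosely on Blender's existing light/color pass split; the post does not specify the pass layout of the rewrite.

```python
from dataclasses import dataclass

# RGB triple; the pass names and layout below are hypothetical.
Color = tuple[float, float, float]


@dataclass
class PixelPasses:
    """One pixel's decoupled passes: lighting stored apart from surface color."""
    diffuse_light: Color
    diffuse_color: Color
    specular_light: Color
    specular_color: Color
    emission: Color


def mul(a: Color, b: Color) -> Color:
    """Component-wise product of two RGB triples."""
    return (a[0] * b[0], a[1] * b[1], a[2] * b[2])


def add(*colors: Color) -> Color:
    """Component-wise sum of any number of RGB triples."""
    return (sum(c[0] for c in colors),
            sum(c[1] for c in colors),
            sum(c[2] for c in colors))


def combine(p: PixelPasses) -> Color:
    """Rebuild the combined pixel as light x color per component, plus emission."""
    return add(mul(p.diffuse_light, p.diffuse_color),
               mul(p.specular_light, p.specular_color),
               p.emission)


# Example: gray diffuse light on a red surface, plus a weak white highlight.
px = PixelPasses(diffuse_light=(0.5, 0.5, 0.5), diffuse_color=(0.8, 0.2, 0.2),
                 specular_light=(0.1, 0.1, 0.1), specular_color=(1.0, 1.0, 1.0),
                 emission=(0.0, 0.0, 0.0))
print(combine(px))
```

Because each term is kept separately, a compositor could, for example, re-grade `diffuse_color` without re-rendering any lighting.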

Screen Space Global Illumination

The second motivation for the rewrite was the Screen Space Global Illumination (SSGI) prototype by Jon Henry Marvin Faltis (also known as 0451). Although SSGI is not necessarily a good fit for the wide range of EEVEE’s supported workflows, strong community interest in the prototype shows that there is demand for a more straightforward global illumination workflow. With SSGI, bounce lighting can be previewed without baking and is applied to all BSDF nodes. This means faster iteration times and support for light bounces from dynamic objects.

A demo of the SSGI prototype branch by Hirokazu Yokohara.

Hardware Ray-Tracing

Supporting SSGI brings ray-tracing support a step closer. The new architecture will make the addition of hardware ray-tracing much easier in the future. Hardware ray-tracing will fix most limitations of screen space techniques, improve refraction, shadows … the list goes on. However, implementing all of these will not be as easy as it sounds. EEVEE will use ray-tracing to fix some big limitations, but there are no plans to make EEVEE a full-blown ray-tracer. For instance, ray-tracing won’t be used for subsurface scattering (not in the traditional way at least).

Note that hardware ray-tracing is not a target for the 3.0 release but one of the motivations for the overhaul.

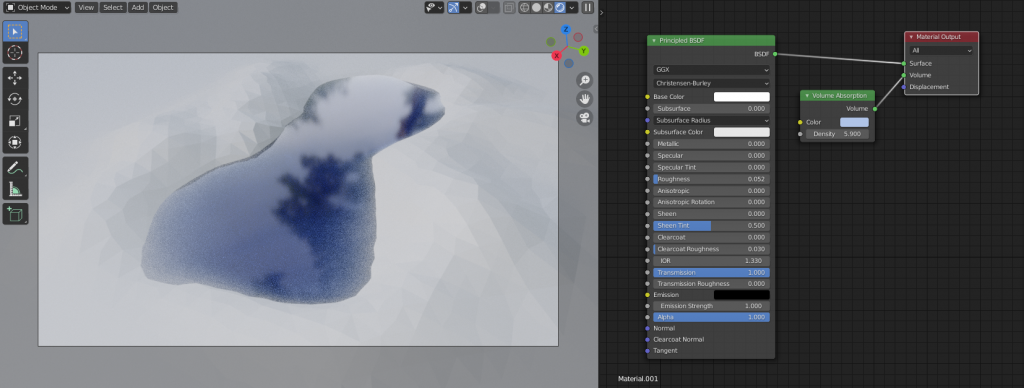

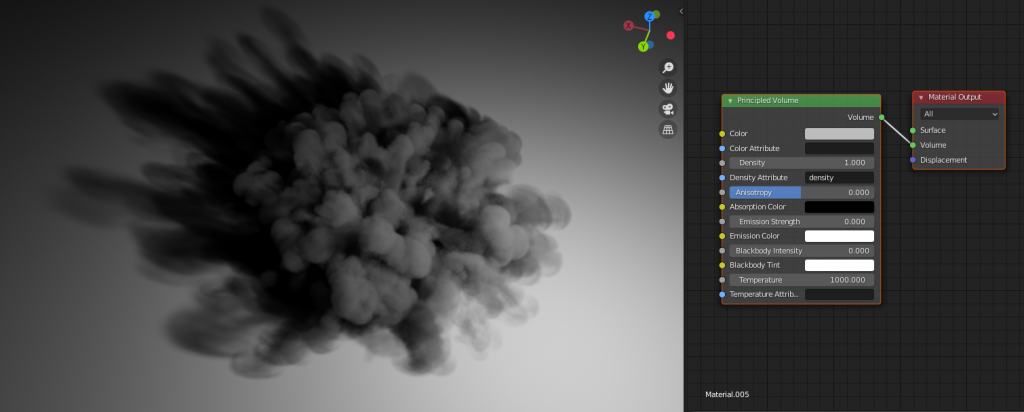

Volumetric Improvements

Volume rendering should also be improved with this update. In the current version of EEVEE (as released in 2.93), object volume materials are applied to the object’s entire bounding box and are still constrained to a rather coarse view-aligned volume grid.

The goal is to improve that by providing a volume rendering method that evaluates volumes in object space. This means volumes will be evaluated individually and per pixel, allowing for high-fidelity volumetric effects without paying the cost of the unified volumetric approach (the method EEVEE currently uses). This would also make volume shadow casting possible for those objects.
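To make "evaluated individually and per pixel" more concrete, here is a minimal sketch that marches one pixel's view ray through a single object's bounds in object space and returns how much light survives (Beer-Lambert transmittance). The axis-aligned bounds, the `density` callback, the fixed step count, and the function names are illustrative assumptions, not EEVEE's actual design; the post only states that volumes will be evaluated per object and per pixel.

```python
import math


def ray_box(origin, direction, bmin, bmax):
    """Slab test: return (t_enter, t_exit) of the ray against an axis-aligned box, or None."""
    t0, t1 = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, bmin, bmax):
        if abs(d) < 1e-12:
            if o < lo or o > hi:
                return None  # parallel to this slab and outside it
            continue
        ta, tb = (lo - o) / d, (hi - o) / d
        t0 = max(t0, min(ta, tb))
        t1 = min(t1, max(ta, tb))
    return (t0, t1) if t0 <= t1 else None


def transmittance(origin, direction, bmin, bmax, density, steps=64):
    """March one pixel's view ray through one object's bounds (object space).

    `direction` is assumed normalized so that dt is a distance. `density` is a
    hypothetical callable returning the extinction coefficient at a point.
    Optical depth is integrated with the midpoint rule; the result is exp(-tau).
    """
    hit = ray_box(origin, direction, bmin, bmax)
    if hit is None:
        return 1.0  # the ray misses this volume entirely
    t_enter, t_exit = hit
    dt = (t_exit - t_enter) / steps
    tau = 0.0
    for i in range(steps):
        t = t_enter + (i + 0.5) * dt
        p = tuple(o + t * d for o, d in zip(origin, direction))
        tau += density(p) * dt
    return math.exp(-tau)
```

In this sketch the box only limits where samples are taken; the shape of the medium comes from `density`. A function that is zero outside, say, a sphere inscribed in the box leaves the box corners empty instead of filling the whole bounding box as described above for 2.93.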

These changes would make the following use cases trivial to render, whereas the current implementation cannot reach this quality (examples rendered using Cycles).

New shader execution model

The current shader execution is straightforward: it executes all BSDF nodes in a material node graph. However, this becomes very costly for complex materials containing many BSDF nodes, and it leads to very long shader compilation times.

The approach in the rewrite will sample one BSDF at random, paying the cost of only a single evaluation. A nice consequence of this is that all BSDF nodes will be treated equally, with all effects applied (Subsurface Scattering, Screen Space Reflections, etc.).
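A minimal sketch of the general idea, assuming the material is flattened into a list of BSDF closures with non-negative mix weights: pick one closure with probability proportional to its weight, evaluate only that one, and divide by the selection probability so the expected value matches evaluating all of them. The `(weight, evaluate)` representation and the weight-proportional selection are assumptions for illustration; the post does not describe how the rewrite chooses the BSDF. A single pick is unbiased but noisy, so a renderer using this presumably relies on accumulating many samples.

```python
import random
from typing import Callable, Sequence

# A closure is (weight, evaluate); evaluate() returns that BSDF's contribution.
# This flat representation is hypothetical.
Closure = tuple[float, Callable[[], float]]


def evaluate_all(closures: Sequence[Closure]) -> float:
    """Exhaustive approach: every BSDF in the material is evaluated."""
    return sum(w * f() for w, f in closures)


def evaluate_one(closures: Sequence[Closure], rng: random.Random) -> float:
    """Stochastic approach: evaluate one BSDF chosen with probability w_i / sum(w)."""
    total = sum(w for w, _ in closures)
    if total <= 0.0:
        return 0.0
    u = rng.random() * total
    for w, f in closures:
        if u < w:
            # Estimator w * f() / p with p = w / total simplifies to total * f().
            return total * f()
        u -= w
    # Floating-point fallback: use the last closure with a non-zero weight.
    _, f = next(c for c in reversed(closures) if c[0] > 0.0)
    return total * f()


if __name__ == "__main__":
    material = [(0.25, lambda: 4.0), (0.75, lambda: 1.0)]
    rng = random.Random(0)
    n = 10_000
    estimate = sum(evaluate_one(material, rng) for _ in range(n)) / n
    print(evaluate_all(material), estimate)
```

Each call to `evaluate_one` runs exactly one closure, so the per-sample cost no longer grows with the number of BSDF nodes; only the averaged result converges to `evaluate_all`.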

NPR & EEVEE

Update June 7, 2021:

Non-Photorealistic Rendering (NPR) is an important side of Blender. There is no plan to deprecate the Shader to RGB node, but it will not be compatible with the new deferred pipeline and all the features that come with it. Any material using a Shader to RGB node will be treated like an alpha-blended material with regard to render passes. This is similar to the current behavior, but results might look slightly different if a material uses both NPR and PBR shading.

Moreover, Grease Pencil geometry support in EEVEE is going to bring shadow casting and more flexible shading options. This will be a per-material option. The current Grease Pencil material pipeline will remain fully supported and serves other goals (a more traditional 2D workflow and performance).

And many more …

There is a huge list of features waiting for this cleaner code base, including Grease Pencil support, vertex displacement, panoramic camera support, faster shader compilation, and light linking.

This is also an opportunity to rewrite the whole engine in C++. Hopefully this will help external contributions and encourage experimentation.

Conclusion

This endeavor has already started in the eevee-rewrite branch. Some features listed in this post are already implemented, but it is still too early for testing. Experimental builds for developers to test will be available soon. At that point the development team will create threads on devtalk.blender.org to follow feature-specific development.

The target for Blender 3.0 is to bring back all existing features and add some new ones. The final set of feature targets for 3.0 has not been decided yet.

Will this allow for giving Eevee a proper shadow catcher?

Who knows.

What I would really like is multi-GPU support for EEVEE. I can get my four GPUs to work in my system, but it is a hassle and very easy to make a mistake.

How about baking textures with Eevee? Right now I still have to use Cycles to bake very basic textures for my needs. I wish I could switch to Eevee at some point…

with my email

Will the raytracing Eevee get be biased raytracing or distributed raytracing?

Eevee is extremely powerful when used properly. I have used Eevee for a large VR project (a script was needed for VR rendering, of course), and it rendered immensely complex scenes with lots of volumetrics in a few days (with other software it would have taken months). And, most importantly, it rendered those scenes without compromises: no lost details and no loss in photorealism.

Most people don’t know how to use it properly and expect its workflow to be identical to Cycles’. This is not the case. You need to look at its workflow differently, but there is nothing it can’t achieve.

Absolutely amazing tool. I can say this without a doubt, as I have experience working with it in production environments. Everyone who says differently just doesn’t know how to use it. But it’s the same with every tool. Bad player always blames the controller.

Hello Clément, with Eevee you really made my life easier. Finally I can render flicker-free high-resolution animation.

I have 2 questions, the only things that concern me:

1) When Grease Pencil is rendered with Eevee, can we finally use it together with alpha-blended image planes?

Right now I still have to render all the layers in my scenes one by one and composite them in a video editor, because Grease Pencil always shines through alpha-blended materials.

2) Can we hope for a speedup during animation playback?

Thanks for your hard work!

I think EEVEE is already a very strong real-time renderer. I think it just needs real-time GI (not screen-space GI; ray-traced GI or any method preferred by Clément Foucault) and real-time reflections (not screen-space reflections; ray-traced reflections or any method preferred by Clément Foucault).

I really hope real-time GI and reflections (but not screen space) get added to EEVEE.

Big thanks to Clément Foucault.

Good news! But what about light leaks and particle nodes? Maybe ray tracing will do the job, but there is almost no news about particle nodes with EEVEE. I have been checking the meeting notes every week :(

Pssst, real time compositor needs all image scopes

It’s very exciting news. I started using Blender two years ago, and now it’s an indispensable tool in my life. Thank you so much; I always cheer for you. One day, I hope technologies such as UE5’s Nanite and Lumen will be applied to Blender.

Good work, developers, but everybody in this community wants a system like Nanite and Lumen in UE5.

It is also the future, and a good integration of it (or a similar system) in Blender is the road everybody wants :)

I love Blender, never stop. Good work, devs <3

“Note that hardware ray-tracing is not a target for the 3.0 release but one of the motivations for the overhaul.”

What you should really do is merge the engines: full “Cycles” tracing, but in real time. When EEVEE came out I was very happy, because although it isn’t realistic, it lets me render my animations. “Cycles” is beautiful, but its cost is time: EEVEE gives you very good results in 10 s to 40 s, whereas “Cycles” takes 2 hours for the same scene. I have a PC with 4 GB of RAM and only 2 cores. Don’t misunderstand me, I’m not saying the new improvements aren’t great; they are. I’m just saying that the faster and more realistic Blender is, the better. That’s why I say the engines should be merged, that is, invest in real-time ray tracing.

Feature-wise, EEVEE is in such a sad state that SSGI won’t help it. It’s kind of like introducing a diesel engine in the 21st century.

Yeah, I was thinking there are better solutions than that, but maybe because Blender is a DCC and not a real game engine, some technical things might be possible to achieve.

What about Material View mode and In Front/Clipping Region features? Will it work together somehow again?

I wrote a big topic on devtalk about this issue: https://devtalk.blender.org/t/wireframes-colors-in-front-clipping-region-issue-or-why-i-still-have-to-work-in-2-79/18687

Let’s go forward! all together.

Clement,

What about VR? Is anyone at Blender taking care of it? I know several Blender artists have been using it, and we’re wondering what’s going to happen with it.

thanks for all you do!

I would like to know what will happen with AMD support in Cycles X, since the internal API is changing and there is no decision yet.

WOW. I’d simply been hoping for panoramic support, but I guess this is nice too. What a list!

I also had difficulties with volume rendering, since you mention it : a water surface with objects supposed to disappear through… except the volume bounding box didn’t account for the water surface heavily deforming, so floating objects would dip in and out of the volume, changing their color (as it should). I suppose this should solve that.

Thanks !

My biggest hope is that Blender and Eevee improve performance for animators and catch up to Maya.

Maya’s Viewport 2.0 can display real-time displacement, lights, shadows, hair, shading, etc. on complex rigs and still hold a constant frame rate, similar to what Pixar presented in their 2015 demo. However, it is now outdated and a big hassle to set up. Since Autodesk is slacking, most studios in the industry have built on top of it, and most of them have incorporated proper PBR into VP2.

Now it is Blender’s turn to take the lead and provide studios and the community with something much better on a bigger scale, so I urge Clément and the devs to keep this in mind when redesigning Eevee. As you have already seen, even Epic Games is trying to provide something similar with UE5. Even though most rigging and animation will still happen in 3D software, the ability to rig and animate complex characters in-engine is going to change how artists work, at least in TV shows and video games.

You can do all that in Blender (OK, except for tessellation). Mesh deformation performance is terrible, but I don’t think Eevee is holding things back performance-wise. I mean, you can orbit the view in your scene at a decent frame rate, can’t you?

I’m not talking just about viewport navigation but about animating and moving the rigs; they’re extremely slow in Eevee’s preview modes.

Are they actually slower than in solid mode for you ? My experience tells me that rig evaluation and subdivision surfaces are the bottleneck, but it may not be true in all cases.

The most consequential feature will be the creation of a C++ API for plugin development.

I am very happy to hear this news. Since 2.80, EEVEE has had almost no major updates. The focus of development has been on Sculpt, GPencil, and the UI, and some UI updates are even worse than 2.7x. Modeling hasn’t been updated much, but for most users, modeling and rendering are the most important parts. Please put more energy into modeling and rendering, especially EEVEE; this is an opportunity for Blender. SDFGI, parallax mapping, panorama rendering, glare FX and more, please. We are really not interested in UI updates. We don’t need industry standards, because this is Blender, not Autodesk.

We don’t need industry standards, because this is Blender…

It is imbecilic statements and thoughts like these that made Blender <= 2.7 a recurring joke among the pros…

It is since Blender 2.8, and its efforts to comply with industry standards, that the project has become more and more respected, receives good funding, and has more than 50 users on earth who now care about it…

So please don’t bring your toxic “Blender as a UFO software is great” mentality…

EEVEE is the main reason Blender 2.8 received attention, because it makes the workflow more convenient and opens up more possibilities.

If you insist on industry standards: is .fbx import perfect? Even .3ds and After Effects were not officially supported in 2.8 and 2.9. How many people use the Industry Compatible keyboard presets? Is the new toolbar clicked frequently?

Right, bro, EEVEE was the main reason Blender 2.8 received attention, but now, unfortunately, it will be the main reason Blender loses attention. I say this because I’m a big Blender lover.


FBX support is needed indeed. It sucks, but it’s widely used in video games.

3ds max format is not industry standard, it is one popular software own format. Same for After effect. Your argument here is off….

I use the industry compatible shortcuts! So speak for yourself. This shortcut set is vastly superior to the default one.

When you are a real pro and use various software packages during your day, it is great to have shortcuts that stay consistent between them. Not to mention that the 1/2/3/4 keys for switching selection types are just priceless. Hitting Tab to create a node is vastly smarter than Shift+A. I would invest in these shortcuts, since the best CGI artists will likely come from AD / SideFX software…

I don’t understand. We are already using the SSGI add-on, or even a built-in version, so what is new? I was 100% sure you would add full ray tracing to EEVEE in 3.0, and we are very sad to hear this. All engines and programs in the world have ray tracing, but from Blender EEVEE, Clément Foucault, June 2021 (almost 2022): “EEVEE will use ray-tracing to fix some big limitations, but there are no plans to make EEVEE a full-blown ray-tracer.”

What would be the point of making Eevee a ray tracer? You would just be making it cycles. Cycles is a full blown ray tracer. Use that.

Cycles is not a full-blown ray tracer, bro, it is a path tracer. It needs many samples to remove the noise, and then it needs a denoiser, and no denoiser works well with animations. Unfortunately, Clément Foucault killed all our dreams.

Path tracing is ray tracing for the special case where you simulate the rays bouncing randomly when shot out. Eevee was always supposed to be a rasterizer, not a ray tracer. If you want that, go make it yourself. I would drop Eevee if it became a ray tracer. Even Epic decided that adding ray-tracing FEATURES to their rasterizer wasn’t worth the trouble and used special structures and SDFs to get GI in their own rendering pipeline.

You’re complaining about getting a new and improved render engine for free? I admit that’s bold of them, but I suggest being positive about it.

I know what path tracing is, bro. Because of you and people like you, I think I will learn to code and make it myself. By the way, it is not very nice to say “If you want that, go make it yourself”.

Finally, thanks.

Have some respect.

First of all, your technical statements show that you have zero knowledge.

Second, Clément is a great engineer who created an amazing real-time renderer. You owe him a lot as a user, and he killed zero dreams. If you want a Whitted ray-tracing engine and nothing else, that’s your own problem. Go make your own real-time Mental Ray and commit the code.

I have a lot of respect, but I think you don’t. I love Blender and I really love all of Clément Foucault’s work, but I spoke my mind, because Blender has almost everything we want as users: modeling, unwrapping, texturing, texture painting, tracking, compositing, the VSE, geometry nodes, and Grease Pencil.

In my opinion, if you can render a scene (still image or animation), it is very nice to render it in the same software you used for modeling and texturing (texture painting or unwrapping). With ray tracing, as far as I know, you get real-time GI, reflections, etc. in 5, 10 or 20 seconds per frame. That was my dream. I know I can use Unreal, D5 Render, Twinmotion or anything else, but I dreamed of doing it in my favorite program (Blender). I mean, the main reason is render time without the noise of a path-tracing renderer. What do you think, bro? Sorry for my bad English.

I hope I explained it well.

That is NOT a ray tracer. Unreal Engine 5 is not a ray tracer, it uses a rasterization engine. There were attempts at using BVH structures to try to get reflection and GI information, but they decided it was too costly and did something else.

You are right, bro. I just meant that we need something close to real GI and reflections, not screen-space reflections or screen-space GI. In your opinion, can we have something like this in EEVEE, in real time, without baking?

thank you very much .


Is SSGI really a good way to go? Given the nature of Blender versus a game engine, you could keep an entire voxel representation of the scene in memory, update the edited regions after each move or scale to maintain its integrity, and then get voxel cone-traced or SDF GI instead. I guess Blender is open source and I could possibly do it myself…

SSGI is OK, but now that UE5 Lumen exists, we would need SDFGI as well, or at least VulkanRT GI. It’s simply mind-blowing how fast and beautiful Lumen looks.

Very good news !!

Will this finally make Screen Space Reflections and Ambient Occlusion compatible with Screen Space Refraction ?

While the increased focus on PBR may be good for some, others are really concerned that this rewrite will break EEVEE’s and Blender’s uses for NPR (toon shading, anime and other use cases that may be the opposite of realism). I really think the Blender Foundation itself should give more assurance to NPR users and to companies that use Blender for NPR.

The article has been updated to address these worries. There is no plan to remove the Shader to RGB feature, which is the base of the NPR workflow in EEVEE.

I personally don’t need any of these changes, but they are welcomed as long as you keep the node system as flexible as it is now. (or even make it more flexible)

There are lots of usecases for Blender outside of Realism / PBR, which are used by individuals and companies alike.

So there is a concern within these communities that those changes may “break” their Blender.

Any closure on that would be very much appreciated.

Thank you for your hard work.

Will you be keeping the ‘Shader to RGB’ node? Since so many non-photorealistic styles heavily rely on this node to function. A lot of us in the NPR community are worried that we will be pushed out of the Blender family with this update because our goals aren’t centered on realism.

There is no plan to remove the Shader to RGB node. The article has been updated regarding this.

Sorry to bump into the flow of comments, but I have a small issue with EEVEE: it is limited to a maximum of 128 lights in a scene, and I use about 200 on average. Can that limitation be overridden on the user end (with the corresponding drop in performance, of course)? Thank you for your answer, Clément.

This is really awesome !

I was thinking about the POM thread on Devtalk. Is there any plan to merge it ?

Thx again for Eevee,

Francis

Great!!! Very welcome :)

Y E S ! ! !

Screen Space Global Illumination is an absolute dream come true. You’ve made my day by announcing that; it’s my number one wishlist item for Eevee.

Will this work in conjunction with baked GI and other probes? To use baked lighting information in situations where the rays can not be traced through screen space, and use screenspace GI when it is available?

I think that would offer the best of both worlds in my opinion, since naturally SSGI has screenspace limitations, and it would allow for example the Irradiance Volume probes to be larger, have lower resolution, less baking time, and just exist as a nice fallback solution.

As for hardware ray tracing, that will be heaven once it has landed.

Hardware Ray Tracing will make Eevee an absolute dream to work with. Near Cycles quality, in real time, with no fussing about baked lighting and no screenspace limitations? Where do I sign?

Nice with real-time compositor, but when will real-time rendered EEVEE Scene Strips be implemented in the VSE?

Great plans for future.

What about past issues?

Bump mapping, for example, which has been broken for a few years?

You guys are on fire.

With EEVEE coming ever closer to Cycles’ features, I wonder if this would be a good time to implement separate UI settings for viewport rendering and final rendering (i.e. to allow viewport rendering to be done in EEVEE and final rendering in CyclesX without having to constantly change the render settings in the UI). The default viewport render could be set to “same as render” to maintain the current paradigm for users who don’t want the change.

Absolutely agree! Eevee should have a separate UI settings for viewport rendering and final rendering

Good idea but actually, you don’t think far enough.

Having one place in a static user interface for render settings is what should be completely changed…

Blender should have a fully nodal rendering setup, where the cycles/eevee render settings are 2 nodes.

This way, users could create as many render-settings nodes as they want and need, and connect them to whatever they want. This total modularity is lacking in Blender 2.x.

In conclusion, the problem is this obsolete constrained workflow that needs to be replaced by a new nodal workflow.

Yeah, scene management, I/O and rendering nodes. I would dig that. Kévin is working on alembic procedurals for Cycles which is a step in this direction but sure a procedural scene description of some sort would be nice.

You could have said reduce shader compilation and I would have been happy with that, thanks blender team, performance is literally the only thing we ask for o.o

100% performance! Don’t want to use the program if it doesn’t function… undo taking between 2-5 seconds in material preview sometimes? Eesh!

UE5 has really set a new high especially with Lumen.

I think many will start using it for rendering until Eevee gets some of these features; the results are really incredible.

Amazing !

It’s so nice to read that Eevee is on its way to becoming even better :)

Do you think it will be possible at some point to implement Nanite-like real-time decimation of the scene geometry, so the renderer can handle virtually any complexity?

Thanks for your great work.

While it might be possible, in order to achieve it Epic rewrote the entire rendering pipeline, so it’s very unlikely.

I’m no technical expert at all, but I think it can be achieved fairly easily with geometry nodes, if we make a setup that uses camera distance or view distance to decimate the geometry. Erindale already did something similar with his culling system. The problem, however, is that you would have to apply the setup to every mesh in the scene, which can really suck and waste time if the scene is big and detailed, which is probably why you would want a system like this in the first place.
