In the last post about the Viewport Plan of Action we ended by briefly covering the upcoming realtime engine of Blender 2.8, nicknamed Eevee. Eevee will follow the (game) industry PBR (Physically Based Rendering) trend, supporting high-end graphics coupled with a responsive realtime viewport.

Sci-fi armor by Andy Goralczyk – rendered in Cycles with outline selection mockup, reference for PBR

At the time it was a bit early to discuss the specifics of the project. Time has passed, the ideas have matured, and now it is time to openly talk about the Eevee Roadmap.

Scene Lights

The initial goal is to support all the realistic Blender light types (i.e., all but Hemi).

We will start by supporting diffuse point lights. Unlike the PBR branch, we will make sure adding/removing lights from the scene doesn’t slow things down. For the tech-savvy: we will use a UBO (Uniform Buffer Object) with the scene light data to prevent re-compiling the shaders.
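To make the UBO idea concrete, here is a minimal sketch in Python, not Eevee's actual code. The `MAX_LIGHTS` cap, the buffer layout, and the field names are all assumptions made for illustration. The point is that the buffer always has the same size and layout, so adding or removing a light only changes the bytes that get uploaded, never the shader source.

```python
import struct

MAX_LIGHTS = 8  # hypothetical fixed capacity that the shader is compiled for

# One light is stored as two vec4s: (position.xyz, radius) and (color.rgb, energy).
# Keeping everything in 16-byte units is an assumption meant to mirror std140 rules.
LIGHT_FORMAT = "<4f4f"
LIGHT_SIZE = struct.calcsize(LIGHT_FORMAT)  # 32 bytes per light


def pack_lights(lights):
    """Pack up to MAX_LIGHTS lights into a fixed-size byte buffer.

    Each light is a dict with 'position' (x, y, z), 'radius',
    'color' (r, g, b) and 'energy'. Unused slots are zero-filled.
    """
    if len(lights) > MAX_LIGHTS:
        raise ValueError("too many lights for the fixed-size buffer")
    # Header: the light count, padded with three ints to fill 16 bytes.
    data = bytearray(struct.pack("<4i", len(lights), 0, 0, 0))
    for light in lights:
        data += struct.pack(
            LIGHT_FORMAT,
            *light["position"], light["radius"],
            *light["color"], light["energy"],
        )
    # Zero-fill the remaining slots so the size never changes.
    data += bytes(LIGHT_SIZE * (MAX_LIGHTS - len(lights)))
    return bytes(data)
```

In this sketch a scene with no lights and a scene with eight lights produce buffers of identical size; the shader would loop up to the count stored in the header.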

Next we can support specularity in the shaders, and expand the light support to include area lights. This implies expanding the GGX shader to account for the UBO data.

We will also need to rework the light panels for the Eevee settings since not all settings make sense for realtime.

Soft shadow

We all like our shadows smooth as a baby’s butt. But realistic smooth shadows are computationally expensive. For the realtime mode of Eevee we will follow the shadow buffer implementation we have in Blender now. For offline Eevee rendering (i.e., playblast) we can crank that up and raise the bar.

Regular Materials

Uber Shaders

Things are well lit, but we still need materials to respond to that light.

We will implement the concept of Uber shaders following the Unreal Engine 4 PBR materials. Since Eevee’s goal is not feature parity with UE4, don’t expect to see all the UE4 uber shaders here (car coating, human skin, …).

An Uber shader is mainly an output node. For this to work effectively we also need to implement the PyNode Shader system. This way each (Python) Node can have its own GLSL shader to be used by the engine.

UI/UX solution for multi-engine material outputs

Multiple engines, one material, what to do?

A material that was set up for Cycles and doesn’t yet have an Eevee PBR Node should still work for Eevee, even if it looks slightly different. So although we want to support Eevee’s own output nodes, we plan to have a fallback solution where other engine nodes are supported (assuming their nodes follow the PyNode Shader system mentioned above).

Convert / Replace Blender Internal

After we have a working pipeline with Eevee we should tackle compatibility of old Blender Render files. That said, the Cycles fallback option should be enough to get users to jump into Eevee from early on.

Advanced Materials

More advanced techniques will be supported later, like:

- SSS

- Clear Coat

- Volumetric

Image Based Lighting

We will support pre-rendered HDRI followed by in-scene on-demand generated probes. This lets the scene objects influence each other (reflections, diffuse light bounce, …).

We need the viewport to always be responsive, and to have something to show while probes are calculated. Spherical harmonics (i.e., diffuse only) can be stored in the .blend for quick load while probes are generated.

Time cache should also be considered, for responsiveness.

Glossy rough shaders

Agent 327 Barbershop project by Blender Institute – rendered in Cycles, reference of Glossy texture in wood floor

We can’t support glossy reflections with roughness without prefiltering (i.e., blurring) probes. Otherwise we get terrible performance (see PBR branch :/), and a very noisy result.
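As a rough illustration of prefiltering, here is a small Python sketch, not the planned implementation. It builds a chain of progressively blurrier copies of a row of probe texels and picks a level from the material roughness. The pairwise averaging and the linear roughness-to-level mapping are assumptions chosen for simplicity; a real prefilter would blur according to the shape of the specular lobe.

```python
def build_mip_chain(row):
    """Build successively blurrier, half-size copies of a 1D row of texels.

    Level 0 is the original row; each following level averages
    neighbouring pairs. The row length is assumed to be a power of two.
    """
    chain = [list(row)]
    while len(chain[-1]) > 1:
        prev = chain[-1]
        chain.append([(prev[i] + prev[i + 1]) / 2.0 for i in range(0, len(prev), 2)])
    return chain


def roughness_to_level(roughness, level_count):
    """Map roughness in [0, 1] to a fractional level of the chain.

    The linear mapping is an assumption made for this sketch.
    """
    roughness = min(max(roughness, 0.0), 1.0)
    return roughness * (level_count - 1)
```

Since the blur is paid for once when the probe is generated, a rough material only needs a single lookup into a blurry level at render time instead of many noisy samples.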

Diffuse approximation

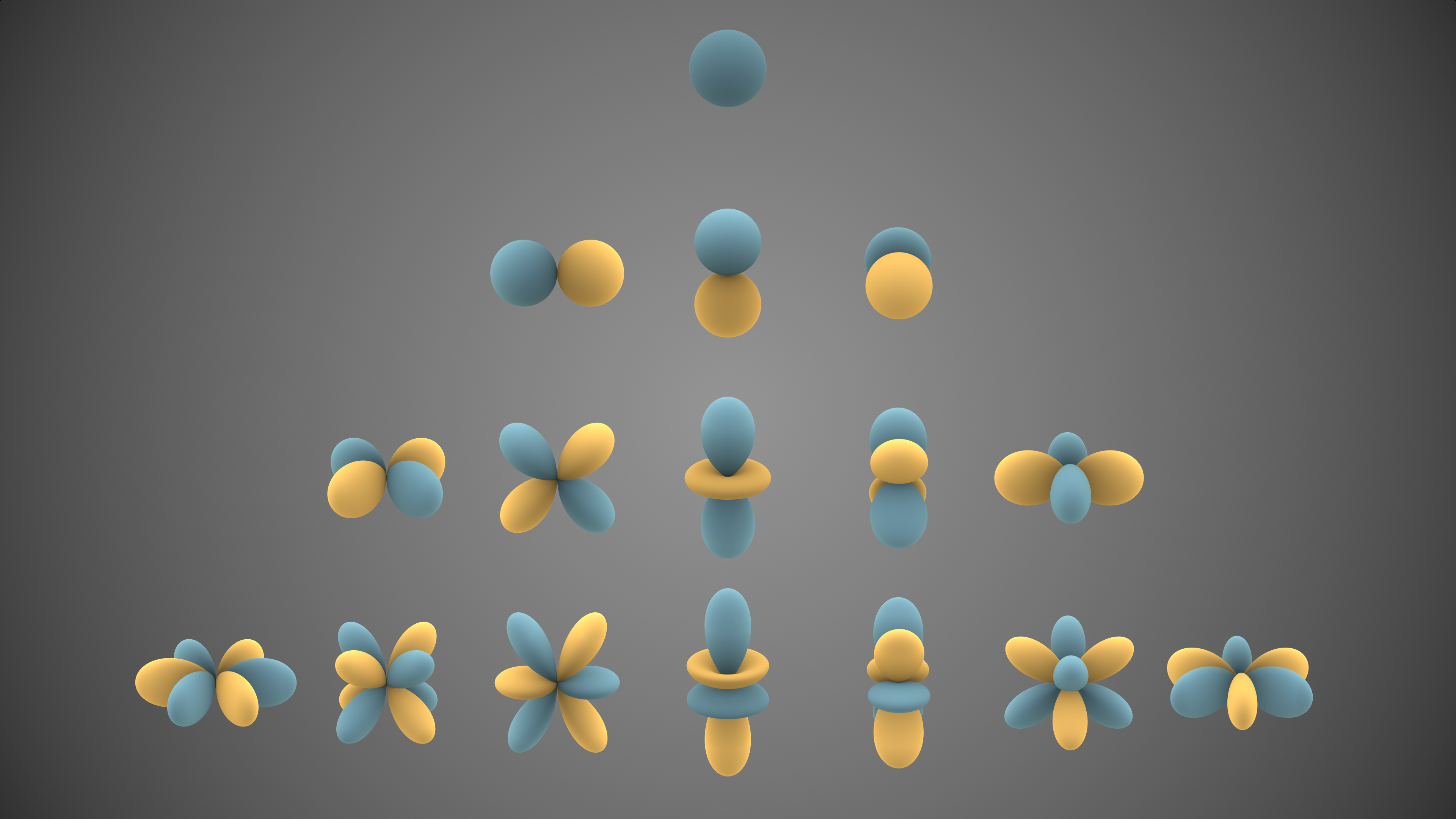

Visual representations of the first few real spherical harmonics, from wikipedia

There are multiple ways to represent the irradiance of the scene, such as cubemaps and spherical harmonics.

The more accurate way is to use a cubemap to store the result of the diffuse shader. However, this is slow since it requires computing the diffuse lighting for every texel.

A known compromise is to store low-frequency lighting information in a set of coefficients (also known as Spherical Harmonics). Although this is faster (and easy to work with), it fails in corner cases (when lights end up cancelling each other out).

Eevee will support spherical harmonics, leaving cubemaps solely for baked specular results.
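To show what "a set of coefficients" means in practice, here is a minimal Python sketch that projects a lighting function onto the first four real spherical harmonics (bands 0 and 1) and reconstructs it. This is a simplified illustration, not Eevee's code: it works on a single channel, the sample count and the Fibonacci sampling pattern are assumptions, and the cosine convolution that turns radiance into irradiance is left out.

```python
import math


def fibonacci_sphere(count):
    """Return `count` roughly evenly spread unit vectors on the sphere."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(count):
        z = 1.0 - (2.0 * i + 1.0) / count
        r = math.sqrt(max(0.0, 1.0 - z * z))
        phi = i * golden_angle
        points.append((r * math.cos(phi), r * math.sin(phi), z))
    return points


def sh_basis(direction):
    """First four real spherical harmonics evaluated for a unit vector."""
    x, y, z = direction
    return (0.282095, 0.488603 * y, 0.488603 * z, 0.488603 * x)


def project_to_sh(radiance, sample_count=2048):
    """Estimate four SH coefficients of a scalar function of direction."""
    coeffs = [0.0, 0.0, 0.0, 0.0]
    weight = 4.0 * math.pi / sample_count  # solid angle per sample
    for direction in fibonacci_sphere(sample_count):
        value = radiance(direction)
        for i, basis in enumerate(sh_basis(direction)):
            coeffs[i] += value * basis * weight
    return coeffs


def evaluate_sh(coeffs, direction):
    """Reconstruct the low-frequency lighting for one direction."""
    return sum(c * b for c, b in zip(coeffs, sh_basis(direction)))
```

For example, `project_to_sh(lambda d: max(d[2], 0.0))` describes a simple light coming from above with just four numbers. Evaluating the result for a downward direction gives a negative value, which hints at the kind of corner case where the approximation breaks down.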

Probe Objects

Like force fields, probes should be empty objects with their own drawing code.

Environment map array

Reference image from Unreal Engine 4, room design by NasteX

Large objects (such as a floor) may need multiple probes to render the environment correctly. In Unreal, an environment map array handles this on supported hardware. This is not compatible with OpenGL 3.3 core. We can still support this (via an ARB extension) on modern graphics cards. But we should look at alternatives compatible with old and new hardware, such as tetrahedral maps.

Post Process Effects

For the Siggraph deadline we need the following effects:

- Motion Blur

- Bloom

- Tone Map

- Depth of Field

- Ground Truth Ambient Occlusion

Other effects that we would like to implement eventually:

- Temporal Anti-Alias (to fix the noisy fireflies we get from glossiness)

- Screen Space Reflection (more accurate reflection, helps to ground the objects)

Epilogue

Blender 2.8 Viewport design mockup by Paweł Łyczkowski, suggesting fresnel wires over a Cycles preview

Just to recap, the core features mentioned here are to be implemented by Siggraph 2017, with a more polished, usable version by the Blender Conference.

Development of the viewport project (of which Eevee is a core part) is happening in the blender2.8 branch. It is still a bit early for massive user testing, but stay tuned for more updates.

Edit: You can find the Eevee roadmap organized chronologically and with a more technical wording at the Blender development wiki.

Can I render in distributed/network rendering in blender EEVEE/2.8

When is 2.8 going to be released with Eevee? I’m getting excited here.

Hi, I have a question. I’ve been trying 2.80 for a few months. I love the way EEVEE is working, replacing the old Internal Render. But there is still a big issue for me. In my videos I often mix real footage with rendered objects. I used to work with Internal, because in animation it was faster than Cycles. Now I’ll try to do the same things with EEVEE. But I cannot do the complex work in the compositor that I did with Internal, because the render passes of EEVEE are too restricted and there’s no way, at the moment, to override the PBR material characteristics. I used to create transparent materials for catching shadows, which is an awesome feature if you have to do deep compositing. I also used render passes such as Object Index and Material Index, Specular, Shadow, Emit… Do you think that they will be re-integrated in EEVEE rendering? Thank you, and sorry for my poor English!

Dear Beniamino,

Did you say that you are using the Object and Material Index?

If your answer on my question is yes, could you please tell me how you did it, I can’t find anything about it on the internet in Blender 2.8 (EEVEE).

Regards,

Tim.

Great work

What about equirectangular camera for 360 image in Eevee..

We need it at build version also.

Thank you

Could you bring in B2.8 new particle system?

For example, Unity will get programmable particle system this summer:

https://youtu.be/vj1bUfSVBnY?t=1634 .

Would it be possible something like this in Blender?

Is it impossible to use global illumination with Eevee?

Does eevee support Metaball at the moment?

My blender-2.80-ebbb55d-win64 keeps crashing if I try to render a metaball with the Eevee engine

Thanks to all, but …. I get an error in 2.79a on a new Windows 10 system:

[… opencl_util.cpp: 842] Tesla P100-PCIE-16GB not officially supported yet.

RATS! Not mentioned in any release notes for 2.8. Any idea when it might be supported?

Everything sounds so fine and well but…how does 2.8/eevee affect the blender game engine? I want to create more games on BGE but its engine is not very compatible with other engines of blender. Its not very well translated to blender render or blender cycles.

I hope, I wholeheartedly hope that BGE isn’t pushed away or discarded in favor of animation-centric engines, mainly because BGE has a lot of potential and, despite it not being that sophisticated compared to other engines, is still something that I’m just passionate about working with.

That being said, the Blender team has done astonishing amounts of work and it really shows. I hope to see some of the improvements also made to the Blender game engine.

Hi all,

I love Blender – it’s the best software in the world. Hopefully we will have profits soon and we can sponsor you. Awesome work, guys! A few things to touch:

1/ It would be great to improve animation tools in Blender. Yes, animation needs some care.

2/ Custom colors for wireframe display – now it is hard to tell what is an armature and what is a mesh. Maybe not a big thing, but very useful.

3/ I have an idea to merge Makehuman with Blender and make Makehuman as an option to choose among render engines. Both programs are written in Python and Makehuman seems to stop in developing. Under the Blender Foundation, Makehuman can get a new power. I know that there is a great Manuel Bastioni Lab plugin , but it would be great to have Makehuman integrated in Blender. This feature in Blender will be welcomed by users because creating human avatars is a quite hard work.

What do you think about that, guys?

In game industry this will be a game changer!

I really hope that BGE will go into this.

Go Eevee!

A side note; I notice that Unreal 4 has features that allow its current realtime simulation to run at an excellent frame rate.

The core of that is in a few things:

Deferred Shading

Vector field particles(though their implementation of physics currently lacks the sophistication of boids, etc. in blender by miles)

Pre-calculated bounce light via Lightmass

Stationary lights using IES profiles (photometric lights)

Lit Translucency with shading “in a single forward pass to guarantee correct blending with other translucency, which is not possible with multipass lighting techniques. Translucency can cast shadows onto the opaque world and onto itself and other lit translucency.”

I really think the Blender development workings could easily adapt these things and based on what I’ve read, seen, and used. New thoughts on objective work already ongoing and some new. Rock on.

I would like to see bullet, mantaflow, EEVEE code merged then used for realtime simulation in Blender AND as the new default for realtime BGE. While at it, get the GIMP team on board and merge use GIMP code as the core for a sophisticated internal texture studio(all Python). The final touch would be work being done at https://github.com/pybind/pybind11 being implemented. The conjoined work would surpass that of all else and also would open up a new world of design capabilities for designers and become the central utility for exchange(file formatting) as well. Awesome work

Greetings all !

I’m using 64-bit Windows 10 and have attempted to download Eevee several times, but I can’t find it in 2.8, the 2.9 experimental build, or any other build I think I installed.

Can someone please show me how to download and test Eevee, or port it to version 2.9?

I looked over the Eevee project engineering documentation. From the clay section, I understand they removed the Viewport shading tab (Rendered, Material, Textured, Solid, Wireframe, Bounding Box) so as to combine two or three functions to be activated in the node compositor. However, this greatly affects how the Blender Game Engine works. I wonder when they are going to publish how to use these functions.

Would be nice if eevee worked with the DISPLACE MODIFIER

and

some ALEMBIC support would be nice ;)

also some options for getting rid of that bad anti-aliasing (for final render purposes), and if the flickering of the lights would be fixed, this would be perfect.

great work guys, thank you, much love. Peace.

Hi Guys ;) Great Job!

Animation support please! ;) It would be very nice if displacement modifier animation works and bone deformation too.

I’m looking forward to animation support.

Cheers from Poland ;)

Diverting away from the topics above. And this may be the completely wrong place for such a question. But is the game engine still going to be available? I have not started to create a game yet but am planning to. This is a game app for android and iOS. Should I use UNITY instead?

I hope it won’t be in real-time render the entire time I’m working on scenes.

I’m sorry, but (IMO) I think it would be cooler if the CMD window did not appear when Blender starts up and closes, and was replaced with another startup screen. Blender would look more professional. Thank you very much. I love this software very much.

EEVEE + (say in a big booming voice) the pooOOOoowerrrrrrr of a neeeeEEEWww improooOOOved compositoooorrrrtr in OOOOOOooooone VIEeeeewwwWPORrrrrT!!!!!

WOOOOOAAAH! Now that’s a superpower.

SoooooOOOO maybe take a look at Nuke and fusion to see how they deall with it and then in true Blender fashion pimp that viewer ooout! Kkkk

Hmmmmm MMMMMmmmm tasty,

Soooo thePie menus have alot of options so what about 1 more.

Da Daaaaaa ‘Node View’. (Whyeeee have we not done this sooner (palm to forehead then turn to look at camera and hold for effect)).

Edit object, weight paint, texture paint, sculpt,Vertex paint. ………. and then…….

NOOOOODE VIEEEEEEEW. (awwwwwesome)

Just take a second

Absorb it in

and now imagine not having to keep cranking your head across 3 monitors (the wondeerrrrrr , the massages, the women, the liquor……ahhhhh…….. you’re dreams turning into a reality).

The pooooooOOOOWERRRRRRR would be limitless Moh Ho Ho Ho Hooooooo. With the power of the EEVEE UNIVERSE IN YOURRRRRR HAAAAANDSSS! (double awesome)

Perhaps worth a chat by the water cooler?

Love your work guys; really fantastic work. No no no like seriously Awwwwesomely amazing k k koooooolie oooooh work ;D

Can’t wait to see what you guys come up with next.

I hope you understand that taking out solid mode and wireframe mode will bring an apocalypse to every user’s pipeline. NOT ALL PEOPLE have the time and money to get a workstation; the economy is not the same in every country, and NOT ALL COUNTRIES can get good HARDWARE.

Think about it, developers: don’t think only of yourselves, and don’t be so selfish just because you have good machines.

The final result is a heavy process that you cannot be watching all the time; that is insane and not suitable for machines in general. Please do not abuse that fact.

For processes like modeling it is useless to be watching real time all the time; you need precision and accuracy. The same goes for UV mapping, so you will ruin the first two important steps of a pipeline. Also, in SCULPTING it is ridiculous to have Cycles running all the time. For these three areas, which are the first three steps ahead of texturing, rigging, lighting and rendering, you are provoking huge delays in production.

Think twice; if you don’t, the competition will eat you sooner than you can imagine.

Greetings

Alright guys, first things first: I’m an imbecile and I’m using Eevee for production work (no, I’m not that stupid, it’s all backed up, but this is more than just a test) that said, it’s a gorgeous renderer, the drawbacks are not even that bad compared to the advantages and the end results speak for themselves. I have a few bug/issue reports.

1. Eevee crashes. When? Open the viewport, then the node editor on the other side, then switch the node editor to a viewport. This causes the crash.

2. Shadow resolution is tiny. Is there a way to increase it or smooth it? I would appreciate some mechanism similar to what we got with traditional Blender’s spotlight non-raytraced shadow. I always use that one for cheap shadows.

3. Depth of field is a bit screwy. I’ve seen this problem arise time and again with regular Blender Internal’s Defocus node. I don’t know the cause there, and I don’t know the cause here, but the depth of the blur is only one-sided. That is, you don’t have a sharp area with blur in front and behind. Also, “Distance” seems to work better than focusing on an object.

4. It’s confusing to have the post-processing stack and reflections switch in two places. I would appreciate having it in just one place, like render layers or some Eevee options tab.

5. Finally, the post-processing effects are not 100% working. I suppose you are aware, but the AO looks kinda weak in some cases (you can’t see it or it has little to no effect), same with the motion blur.

That is all I can remember at the moment, but if that was helpful… I wouldn’t mind beta-testing. Like I said, aside from ugly bugs, I love this renderer, I think it’s gorgeous and I’d love to help shape it (got some experience with UX, too). I will be working with an extensive range of materials on a project code-named “elements” so in case you are interested in a random beta-tester, I can help.

A few things

1] Stabilize Eevee, because Blender is crashing like crazy :)

2] Make a big, easy-to-recognize button for rendering an image with the Eevee engine :)

3] Try to do something about the options, because it is hard to work with all these options in the render tab [maybe a new tab? something like Eevee world?]

4] Generated materials [procedural materials] on simple objects love crashing Blender :).

5] Shadows drop to a very annoying resolution, like 20-40cm triangles [even on a square poly].

6] The look in the 3D view is OK [a bright thing with a procedural material], but in the camera view the same object is totally black, strange :|

7] Changes only show up when the changed node is unpinned and pinned again :| [something with refreshing is not OK].

8] When I open an old project made in Cycles and change from Cycles to Eevee, I can’t add an Eevee type material output; there is only the Cycles type with “surface”.

Eevee has a bright future but needs much more work :) I think Eevee will be a great engine for people making, e.g., visualizations of homes [designers], but only once Eevee works well.

Sorry for my weak English :)

I’m hoping you won’t get rid of Blender Internal. I am doing animation and depend on BI for fast, clean renders, as well as for features like edges. At a minimum, please let it remain as an alternative engine.

Don’t worry, BI won’t be scrapped. I’m a Blender Internal junkie as well. I do recommend getting acquainted with Eevee if this is going to be a permanent feature in Blender 2.8.

If you’ve ever used Element 3D (After Effects plugin), you’ll know how comfortable it is to have instant feedback with PBR materials.

If you’re worried about rendering with Eevee, right now you can do so by clicking the tiny camera (for a still) or the slate (for an animation) on the bottom strip of the viewport, where the proportional editing mode and the snap switch are, right by the right-side end. It’s a bit hidden and tricky, but it’s there.

Not sure if that was what you were talking about, but I wish someone had told me about this before.

All you have to do is hide all the gizmos and such, amp the anti-aliasing all the way up and that’s it.

With Cycles, I can render my images all day long with the node setup. When I switch over to EEVEE, I cannot see my text, with or without nodes. When I add a metallic shader, still nothing. Did I download a bad build?

I was working on another project with similar issues. No matter how I set up the material, before or after using Cycles or Eevee, I cannot view the materials in Eevee.

Any thoughts or suggestions would be greatly appreciated.

I cannot run Blender 2.8. My configuration: APU A-8 3500M 6620G + 6650M + 8Gb RAM (Win 7 64). The console reports that OpenGL is not supported, or something similar. Very strange. I’ll try to update the driver, but I’m not sure it will help :( AMD has never been friends with Blender…

Will there be any way to actually render things in Eevee, or is it solely to make the viewport look nice?

As a suggestion, you guys might want to officially spell Eevee as “EEVEE” in the final release. That’d avoid potential trademark issues with Nintendo. It’d also better indicate that “EEVEE” is actually an acronym. It’d also avoid confusion between the engine and the Pokémon. Just a thought.

Will Eevee get a Shadow-Catcher?

Bump*

what functionality is Eevee currently lacking

in order to be able to serve as a game engine?

is there any plan to replace BGE with Eevee,

or to port the Eevee UI to BGE in the future?

I wonder if Blender eevee would do the animation job. I see the animation stuff needs to be improved. (Mesh animation works but Bones? )

1. Will it work with Unity or Unreal?

2. Will Bone Animation on Meshes weight painted work?

3. I am willing to participate in the Test Stage of blender 2.8 “The Eevee Engine” like reporting bugs

My current feedback for this engine is good enough in terms of graphics.

Developers tends to do drafts… Me too.

Sir in eevee mode… shapekeys not working. it would be great if it works in eevee render mode. thank you for great update

Anyone know if the Viewport shading will be added?

When I start Blender, it crashes, and the log says Error: EXCEPTION_ACCESS_VIOLATION.

But if I start with blender --debug-gpu it works fine.

Please do not break compatibility with BI.

Also I agree that the viewport main goal should be to get a good approximation of the final rendering. But for this to happen a good conversion of materials plus light matching from the various rendering engines is required. So I guess it will be a long trip to go. The way MODO gets it is impressive and I believe it should be used as reference.

I like the PBR branch ideas and Armory. A good UE4 exporter/importer would be great also.

Finally, of course, getting a good rendering in the viewport makes all the difference in the world. I believe it can’t be stressed enough. To render an animation in minutes instead of hours is simply a CGI revolution.

What about interface simplification, I was thinking about one just mesh type!

Will Eevee be able to export to FBX?

I have been using Substance Painter and Quixel to texture my Blender models to take into https://highfidelity.com/ but I’m finding the FBX/PBR support lacking compared to Maya Stingray, as I have to apply the textures in Blender Render. Will Eevee update the FBX export to work in a similar way to Stingray?

Viewing materials on hair and particles will be great.

Hair please please please plereeeeeease.

Great work guys

Why does Eevee crash Blender?

Will the material preview of Cycles be the fallback version of Eevee? It would be nice to have that basic functionality without having to switch to Eevee in the engine dropdown menu every time you want to preview your Cycles materials

Will this viewport benefit from GPU’s?

Mwahahahaha! Should be amazing.

One minor thing:

A blue hemi light on low intensity is a useful simple way to do a sky light. Just because it’s not realistic for a main scene light doesn’t mean it has no use.

Will Blender Render still work?

The only issue I see in all this is how I can wait for that stuff to be released without going insane!

This look very promising ! Keep up the good work guys !

Please make sure that you could export the material set up together with the model into UE4 or similar engines, it’d really prove useful and shouldn’t be too hard to implement with the Eevee’s own output node.

So we will be able to define custom shading models for Eevee? That’s really nice, my main worry was not being able to integrate NPR shaders into the new viewport.

Is Eevee deferred or forward rendered ?

Anyway, thanks for all the great work you guys are doing. Everything I’ve seen so far looks fantastic.

Are probes/environment textures for roughness going to use different blurriness amounts with each level of blurriness stored at a different level of the mipmap-chain so different levels can be mixed together to achieve full gloss or dullness and mostly anything in between?

It’s hard to word this clearly… mipmap level 0:no blur, mipmap level 1:stores some pre-blur, mipmap level 2 stores a bit more pre-blur, etc…

I really hope the developers are keen on concurrent programming. If you’re going for realtime, this new viewport should use every drop of processing power both from multiple cores and GPU. Initially, I thought this was only possible with Vulkan API but Pixar’s demo of USD shows some pretty incredible realtime performance on OpenGL 4. Anyway great stuff!

For Scene Lights and shadows I would like to see the approach from Geomerics enlighten employed. Real-time baking of light maps with interactivity.

http://www.geomerics.com/enlighten/

For shadows, eventually I would like to see usage of newer technologies such as VXGI and could you do vector (SVG) shadow maps instead of bitmaps?

the “Diffuse approximation” images are atomic orbitals!

just the angular solutions (Legendre polynomials), aka spherical harmonics, as they are called in the image descriptions. What’s left to make it an atomic orbital is the radial Bessel functions… ;)

Impressive !

Sounds really really nice.

It’s really important not to forget the option of Render Layers. Recently The Mill and ILM presented their work with Unreal Engine in a film environment. So it would be nice if this is possible too. Another important feature is to make it possible to play Alembic caches in real time!!! That’s a must.

That would make the Blender realtime engine superior in some cases to Unreal. Because working with Unreal is a mess when you only want rendered images and not a game.

Please also take a look at http://armory3d.org/ I found it recently and there are nice features in it…. keep up the good work ;) bye

This sounds amazing! and remember to stress performance. It was mentioned in one of the podcast, but that’s important.

What happened to OpenGL render engine?

I tend to agree with YURI and Chris, and that how it works in MODO is pretty awesome.

Will there only be Uber Shaders output? or will we have like in cycles, so if we want we can make our own Uber shader output, i.e. to mimic Unreal Engine 4 material as close as possible in Eevee as in UE4.

There are some pretty big names involved in real-time rendering, that are contributing to the development fund, Valve, Sketchfab. And if I recall Epic once made a donation.

Is it possible to reach out to them, and try to get more people involved and have their help/opinion to achieve this?

If you ever have used UE4, you will be impressed with the viewport performance.

This will be great when 2.8 is out! Thanks and best of luck!

talking about Blender Internal a bit, is there any plan to port the Stress coordinate system mode to Eevee or even Cycles? it would be so cool to mix it with normals or other effects in the viewport ^^

Pre-computed GI?

Sounds very much like what we are working on for the Qt 3D engine too. Lots of fun times and exciting work ahead. :)

Just throwing this out there. One of the things I had always wanted in the BGE was the ability to use Python to change every parameter in every material (some objects have multiple materials) in realtime. The closest thing we had to this was obj.color, which was very, very limited. What if you have a car with a 3-color paint scheme and want to change the colors? You would basically have to have 3 copies of the same object using alpha masks in order to change each color separately, which is way too complicated. I don’t claim to know how to make this work, it may have even been impossible in the BGE, but please keep this in mind.

Impressive works guys, THANKS, I hope new viewport be useful for Architecture-Visualization and Cartoon Rendering.

Thanks

any plans for Reversed-Z?

http://outerra.blogspot.jp/2012/11/maximizing-depth-buffer-range-and.html

https://nlguillemot.wordpress.com/2016/12/07/reversed-z-in-opengl/

What about rendering out layers/passes ? Will they stay or is compositing all supposed to happen in the viewport?

They will stay at first, though compositing should be able to support real-time engines as well. Perhaps even mixing them both (e.g., Cycles and Eevee composed together).

To illustrate my previous comment: Here are three videos demonstrating how MODO’s Unity shader as well as its Unreal Engine shader (just like MODO’s own Physical Material) all look virtually identical between the viewport and the non-realtime production renderer.

https://vimeo.com/159197577

https://vimeo.com/153409573

https://www.youtube.com/watch?v=9YXH0SrMW8Y

Thank you for sharing this. Is it planned to strive towards visual parity between Blender’s real-time viewport shaders and Cycles materials, and unified look dev workflow?

I.e. will the user get the PBR materials in the viewport automatically while doing lookdev and setting up their Cycles materials – similar to how MODO does it?

Or will real-time PBR viewport shaders/materials have to be set up independently, and Cycles materials re-assigned when the scene is to be rendered in Cycles?

The latter is how Maya does it – where setting up pretty viewport shaders is basically only eye candy in the viewport, but actually useless for final renders because final render materials, be it Mental Ray, Arnold, or whatever, don’t look anything like the final render when seen in the viewport.

Which in turn means that in production it’s rarely worth going through the trouble of making shaders look pretty in the Maya viewport, since you cannot use them for final (non-realtime) renders.

Personally I think the MODO approach is the vastly superior one, and the one all DCCs should strive towards. Give the user as close a representation of their final render (using final offline render engine materials) as is possible in the viewport.

totally agree, and from what i read it seems like it’s going this way, though you can override that behaviour with open gl nodes for some other stuff…

>>Since Eevee goal is not feature parity with UE4…

That’s quite sad, in fact.

How, btw, are realtime reflections going to be implemented? Unity has so-called “reflection probes” for this, but they have some drastic limitations and occasionally may make things ugly (such as a floor reflection getting “detached” from the object on this floor as the distance between the camera and the object increases). What is the Blender devs’ plan for it?
