Grouping a broad issue with code as decentralized as real-time drawing under the umbrella of the “Viewport” project might be slightly misleading. The viewport project essentially encapsulates a few technical and artistic targets, such as:

- Performance improvement in viewport drawing, allowing greater vertex counts

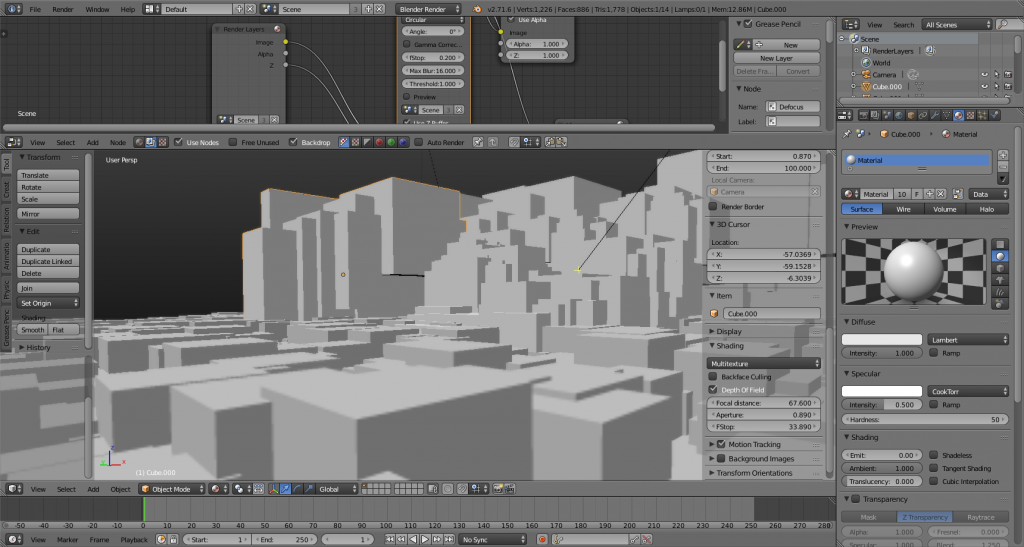

- Shader-driven drawing: custom/user-driven or automatic, for both internal materials and viewport post-processing (including eye-candy targets such as an HDR viewport, lens flares, PBR shaders and depth of field)

- Portability of drawing code: this should let us switch to future APIs and devices, such as OpenGL ES compatible devices, with as little pain as possible

These targets include code that has already been written as part of Blender and as part of Jason Wilkins' viewport GSOC projects, and they will also require more code and a few decisions on our part to make them work. One of those decisions is which version of OpenGL Blender will require from now on.

First, we should note that OpenGL ES 2.0 is a good target to develop for, given the available device statistics, for when we support mobile devices in the future. OpenGL ES 2.0 means, roughly, that we need programmable shading everywhere: the fixed-function pipeline does not exist in that API. Using programmable shading only will also allow us to easily upgrade to a pure OpenGL core profile if and when we need to, since modern OpenGL has no fixed-function pipeline either.

For non-technical readers: OpenGL 3.2 and later have two profiles, “compatibility” and “core”. The compatibility profile is backwards compatible with previous versions of OpenGL, while the core profile throws out a lot of deprecated API functionality, so vendors can enable more optimizations there without having to worry about breaking older features. Upgrading is not really required, since we can already use a modern OpenGL compatibility profile on most operating systems (with the exception of OS X), and OpenGL extensions give us access to most features of modern OpenGL. Upgrading to the core profile would only force us to follow certain OpenGL coding paradigms that are guaranteed to be “good practice”, since deprecated functionality does not exist there. Note, though, that those paradigms can be enforced now with OpenGL 2.1, for instance by using preprocessor directives to prohibit the use of deprecated functions, as done in the viewport GSOC branch.

The sections that follow explore a few of those targets and ways to achieve them.
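
On enforcing core-profile practice under OpenGL 2.1 with preprocessor directives: a minimal sketch of one way to do it, assuming a hypothetical guard header that is included after the system GL headers. Each fixed-function entry point is redefined to a name nothing defines, so a stray call fails to compile (implicit declaration) or, at the latest, to link. This is not necessarily the exact mechanism of the GSOC branch; the `DO_NOT_USE_` prefix and the `GUARD_STR` helpers are illustrative.

```c
/* Hypothetical guard header: include AFTER <GL/gl.h> so the real
 * declarations are not renamed, only later uses. */
#include <assert.h>
#include <string.h>

/* Fixed-function calls absent from OpenGL ES 2.0 and the core profile. */
#define glBegin        DO_NOT_USE_glBegin
#define glEnd          DO_NOT_USE_glEnd
#define glVertex3f     DO_NOT_USE_glVertex3f
#define glNormal3f     DO_NOT_USE_glNormal3f
#define glColor4f      DO_NOT_USE_glColor4f
#define glMatrixMode   DO_NOT_USE_glMatrixMode
#define glPushMatrix   DO_NOT_USE_glPushMatrix
#define glPopMatrix    DO_NOT_USE_glPopMatrix
#define glLoadIdentity DO_NOT_USE_glLoadIdentity

/* Two-level stringification: the argument is macro-expanded first,
 * which lets us check what a guarded name turns into. */
#define GUARD_STR_(x) #x
#define GUARD_STR(x)  GUARD_STR_(x)

/* Sanity check that the guard is active in this translation unit. */
static void gpu_guard_selfcheck(void)
{
    assert(strcmp(GUARD_STR(glBegin), "DO_NOT_USE_glBegin") == 0);
}
```

With the guard in place, a leftover `glBegin(GL_TRIANGLES)` becomes a call to the undefined `DO_NOT_USE_glBegin`, so the build points straight at code that still relies on the fixed-function pipeline.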

- Performance

This is the most deceptive target. Performance is not just a matter of upgrading to a better version of OpenGL (or to another API such as DirectX, as has been suggested in the past). Rather, it is a combination of using best practices when drawing, which are currently not followed everywhere, and using the right API functions. In Blender code we can benefit from the following:

- Avoid CPU overhead. This is the most important issue in Blender. Various drawing paths check the state of every face/edge before sending it to the GPU. Such checks should be cached and invalidated properly; this alone should make drawing of GLSL and textured meshes much faster. It requires rethinking our model of derivedmesh drawing. The current model uses polymorphic functions in our derived meshes to control drawing. Instead, drawing functions should be attached to the material types available for drawing, and derived meshes should have a way to provide materials with the data buffers they request for drawing. A change that will drastically improve textured drawing is redesigning the way we handle texture images per face. The difficulty here is that every face can potentially have a different image assigned, so we cannot easily make optimizing assumptions. To support this, our current code loops over all mesh faces every frame, regardless of whether the display data have changed, and checks every face for images. This is also relevant to minimizing state changes (see below).

- Minimize state changes between materials and images. This will be important if we move to a shader-driven pipeline, since switching between shaders incurs more overhead than simply changing the numerical values of default Phong materials.

- Only re-upload data that needs re-uploading. Currently, Blender uploads all vertex data to the GPU when a change occurs. It should be possible to update only a portion of that data: for example, editing UVs should update only UV data, and if the modifiers on a mesh are all deform modifiers, only vertex data needs updating. This is hard to do currently because derivedmeshes are completely freed on mesh update, and GPU data reside on the derivedmesh.

- Use modern features to accelerate drawing. This certainly includes the instancing APIs in OpenGL (attribute or uniform based), which can only be used with shaders. Direct state access APIs and memory mapping can help eliminate driver overhead. Uniform buffer objects are a great way to pass data across shaders without rebinding uniforms and attributes per shader; however, they require shading language code written explicitly for OpenGL 3.0+. Transform feedback can help avoid vertex streaming overhead in edit mode drawing, where we redraw the same mesh multiple times. Note that most of these are straightforward to plug in once the core that handles shader-based, batch-driven drawing has been implemented.
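
For the first point, caching per-face checks, here is a minimal sketch assuming each face stores an integer image index. Faces are grouped by image once (a counting sort), the grouping is reused on every redraw, and it is rebuilt only after an explicit invalidation; drawing then binds each image once per batch. `FaceImageCache` and its functions are hypothetical, and the actual GL bind/draw calls are left as comments.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical cache of faces grouped by their assigned image. */
typedef struct FaceImageCache {
    int valid;          /* 0 means: rebuild before the next draw */
    int num_images;
    int *batch_start;   /* num_images + 1 offsets into face_order */
    int *face_order;    /* face indices grouped by image */
    int rebuild_count;  /* illustration only: how often we rebuilt */
} FaceImageCache;

/* Call when face-image assignments (or topology) change. */
static void face_cache_invalidate(FaceImageCache *c) { c->valid = 0; }

/* Counting sort of faces by image index, O(faces + images). */
static void face_cache_rebuild(FaceImageCache *c, const int *face_image,
                               int num_faces, int num_images)
{
    assert(num_images > 0 && num_faces > 0);
    free(c->batch_start);
    free(c->face_order);
    c->num_images = num_images;
    c->batch_start = calloc((size_t)num_images + 1, sizeof(int));
    c->face_order = malloc((size_t)num_faces * sizeof(int));
    int *cursor = malloc((size_t)num_images * sizeof(int));
    assert(c->batch_start && c->face_order && cursor);

    for (int f = 0; f < num_faces; f++) c->batch_start[face_image[f] + 1]++;
    for (int i = 0; i < num_images; i++) {
        c->batch_start[i + 1] += c->batch_start[i];  /* prefix sum */
        cursor[i] = c->batch_start[i];               /* already final */
    }
    for (int f = 0; f < num_faces; f++) c->face_order[cursor[face_image[f]]++] = f;

    free(cursor);
    c->valid = 1;
    c->rebuild_count++;
}

/* Draws textured faces and returns the number of image binds issued.
 * No per-face image checks happen here unless the cache was invalidated. */
static int face_cache_draw(FaceImageCache *c, const int *face_image,
                           int num_faces, int num_images)
{
    if (!c->valid) face_cache_rebuild(c, face_image, num_faces, num_images);
    int binds = 0;
    for (int i = 0; i < c->num_images; i++) {
        int first = c->batch_start[i];
        int count = c->batch_start[i + 1] - first;
        if (count == 0) continue;
        binds++;  /* bind image i once, then draw face_order[first .. first + count) */
    }
    return binds;
}

static void face_cache_free(FaceImageCache *c)
{
    free(c->batch_start);
    free(c->face_order);
    c->batch_start = c->face_order = NULL;
    c->valid = 0;
}
```

Because faces sharing an image are contiguous, each image is bound exactly once per redraw, which also serves the state-change point.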
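
For minimizing state changes, a sketch of sorting draw items by shader and then by image before drawing, plus a counter showing how many binds a given order causes. It assumes shader switches are the most expensive change, as the text suggests; `DrawItem` and the helpers are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical draw item: the state it needs and what it draws. */
typedef struct DrawItem { int shader; int image; int mesh; } DrawItem;

/* Order by shader first (most expensive to switch), then by image. */
static int draw_item_cmp(const void *a, const void *b)
{
    const DrawItem *x = a, *y = b;
    if (x->shader != y->shader) return (x->shader > y->shader) - (x->shader < y->shader);
    return (x->image > y->image) - (x->image < y->image);
}

static void draw_items_sort(DrawItem *items, int n)
{
    assert(n >= 0);
    qsort(items, (size_t)n, sizeof *items, draw_item_cmp);
}

/* Counts the binds that drawing in this order would cause, skipping a
 * bind whenever the required state is already current. */
static void count_state_changes(const DrawItem *items, int n,
                                int *shader_binds, int *image_binds)
{
    int cur_shader = -1, cur_image = -1;
    *shader_binds = *image_binds = 0;
    for (int i = 0; i < n; i++) {
        if (items[i].shader != cur_shader) { cur_shader = items[i].shader; (*shader_binds)++; }
        if (items[i].image != cur_image) { cur_image = items[i].image; (*image_binds)++; }
    }
}
```

For four items alternating between two shader/image pairs, the unsorted order costs four shader binds and four image binds; after sorting it costs two of each.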
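
For the instancing point, a sketch of the CPU side of attribute-based instancing, assuming objects that share a mesh differ only by their 4x4 model matrix. Matrices are packed per mesh so that each mesh could be drawn with one instanced call (for example `glDrawArraysInstanced`, with the matrix attribute advanced per instance via `glVertexAttribDivisor`) instead of one draw call per object. The types, names and the fixed `MAX_INSTANCES` limit are hypothetical.

```c
#include <assert.h>
#include <string.h>

#define MAX_INSTANCES 64

typedef struct Object { int mesh; float matrix[16]; } Object;

/* One batch per mesh: matrices packed contiguously, ready to be uploaded
 * as a single per-instance attribute buffer. */
typedef struct InstanceBatch {
    int mesh;
    int count;
    float matrices[MAX_INSTANCES][16];
} InstanceBatch;

/* Groups objects by mesh and returns the number of batches, i.e. the
 * number of instanced draw calls needed. Linear search keeps it short. */
static int build_instance_batches(const Object *objs, int n,
                                  InstanceBatch *batches, int max_batches)
{
    int nb = 0;
    for (int i = 0; i < n; i++) {
        int b = 0;
        while (b < nb && batches[b].mesh != objs[i].mesh) b++;
        if (b == nb) {  /* first object using this mesh */
            assert(nb < max_batches);
            batches[nb].mesh = objs[i].mesh;
            batches[nb].count = 0;
            nb++;
        }
        assert(batches[b].count < MAX_INSTANCES);
        memcpy(batches[b].matrices[batches[b].count++], objs[i].matrix,
               sizeof objs[i].matrix);
    }
    return nb;
}
```

Five objects using two meshes thus become two draw calls rather than five.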

- Shader Driven Drawing

The main challenge here is the combinatorial explosion of shaders (i.e. whether a shader uses lighting, uses texturing, is dynamically generated from nodes, and so on). Ideally we want to avoid switching shaders as much as possible. This can be trivially accomplished by drawing per material, as explained above. We could probably implement a hashing scheme where materials that share the same hash also share the same shader, but this would incur its own overhead. Combinations are generated not only by different material options, but also by various options used in painting, editors, objects and even user preferences. The aspect system in the works in the GSOC viewport branch attempts to tackle the issue by using predefined materials for most of Blender’s drawing, with parameters used to tweak the shaders.

Shader-driven materials open the door to other interesting things, such as GPU instancing and even deferred rendering; we are already experimenting with the latter in the viewport_experiments branch. For some compositing effects, we can reconstruct world-space positions and normals even now using a depth buffer, but this is expensive. A multiple render target approach will help with performance here but, again, this needs shader support. For starters, though, we can support a minimal set of ready-made effects for viewport compositing. Allowing full-blown user compositing or shading requires the aforementioned material system, where materials or effects can request mesh data appropriately.

Shader-driven drawing is of course also important for real-time, node-driven GLSL materials and PBR shaders. These systems still need good tool design, maybe even a redesign of the Blender Internal material system, which would be much more long term if we do it. Some users have proposed a visualization system separate from the renderers themselves. How it all fits together and what expectations it creates is still an open issue: will users expect to get the viewport result during rendering, or do we allow certain shader-only, real-time eye candy with a separate real-time workflow?
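
The hashing scheme mentioned for shader variants can be sketched as a cache keyed by option bits, assuming every option that selects a variant fits in a bitmask (the `SH_*` flags and `shader_get` are hypothetical, and compilation is stubbed out). Each distinct key is compiled once, so the number of programs grows with the combinations actually used rather than with all possible ones.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical option bits that select a shader variant. */
enum {
    SH_LIGHTING = 1u << 0,
    SH_TEXTURE  = 1u << 1,
    SH_VCOLOR   = 1u << 2,
    SH_CLIP     = 1u << 3
};

#define SHADER_CACHE_SIZE 64u  /* must be a power of two */

typedef struct ShaderEntry { uint32_t key; int used; int program; } ShaderEntry;
typedef struct ShaderCache { ShaderEntry slots[SHADER_CACHE_SIZE]; int compiled; } ShaderCache;

/* 32-bit finalizer from MurmurHash3, spreading keys over the slots. */
static uint32_t shader_key_hash(uint32_t k)
{
    k ^= k >> 16; k *= 0x85ebca6bu;
    k ^= k >> 13; k *= 0xc2b2ae35u;
    k ^= k >> 16;
    return k;
}

/* Stand-in for compiling GLSL with #defines generated from the key;
 * returns a fake program id. */
static int shader_compile_variant(ShaderCache *c, uint32_t key)
{
    (void)key;
    return ++c->compiled;
}

/* Returns the program for an option set, compiling it only on first use.
 * Open addressing with linear probing. */
static int shader_get(ShaderCache *c, uint32_t key)
{
    uint32_t i = shader_key_hash(key) & (SHADER_CACHE_SIZE - 1u);
    for (uint32_t probes = 0; probes < SHADER_CACHE_SIZE; probes++) {
        ShaderEntry *e = &c->slots[i];
        if (!e->used) {
            e->used = 1;
            e->key = key;
            e->program = shader_compile_variant(c, key);
            return e->program;
        }
        if (e->key == key) return e->program;
        i = (i + 1u) & (SHADER_CACHE_SIZE - 1u);
    }
    assert(!"shader cache full");
    return 0;
}
```

The lookup is the "own overhead" the text refers to: cheap next to a compile, but still paid per draw unless the resulting program is cached on the material itself.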
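
Reconstructing positions from a depth buffer, mentioned in this section, comes down to per-pixel math that would normally run in a fragment shader; it is written in C here for consistency. The sketch assumes a symmetric perspective projection (as built by `gluPerspective`), the default `glDepthRange(0, 1)`, and a precomputed `tan(fovy / 2)`. It returns the view-space position; multiplying by the inverse view matrix would give world space. The function names are hypothetical.

```c
#include <assert.h>

typedef struct Vec3 { float x, y, z; } Vec3;

/* Window-space depth d in [0,1] -> positive distance along the view axis,
 * for near plane n and far plane f. */
static float linearize_depth(float d, float n, float f)
{
    assert(n > 0.0f && f > n);
    float z_ndc = 2.0f * d - 1.0f;  /* back to NDC range [-1, 1] */
    return 2.0f * n * f / ((f + n) - z_ndc * (f - n));
}

/* View-space position of the pixel at normalized coordinates (u, v) in
 * [0,1]^2. tan_half_fovy = tan(fovy / 2), aspect = width / height. */
static Vec3 view_pos_from_depth(float u, float v, float d, float n, float f,
                                float tan_half_fovy, float aspect)
{
    float dist = linearize_depth(d, n, f);
    Vec3 p;
    p.x = (2.0f * u - 1.0f) * tan_half_fovy * aspect * dist;
    p.y = (2.0f * v - 1.0f) * tan_half_fovy * dist;
    p.z = -dist;  /* OpenGL eye space looks down -Z */
    return p;
}
```

Depth 0 maps back to the near plane and depth 1 to the far plane. Normals can then be estimated from neighbouring reconstructed positions, and those extra samples per pixel are part of why this route is expensive compared with writing normals to a separate render target.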

- Portability

Being able to support multiple platforms (in other words, multiple OpenGL versions or even graphics APIs) means that we need a layer that handles all GPU operations and allows no explicit OpenGL in the rest of the code, so that we can replace the GPU implementation under Blender transparently. This has already been handled in the GSOC viewport 2013 branch (the 2014 branch is just the bare API at the moment, not hooked into the rest of Blender), with code that disallows OpenGL functions outside the gpu module. Such a layer also paves the way for GLES and mobile device support, which Alexandr Kuznetsov worked on and demonstrated a few years back.
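
A minimal sketch of such a layer, assuming a table of function pointers that each backend fills in. Drawing code calls only the `gpu_*` wrappers, so an OpenGL 2.1, OpenGL ES 2.0 or other backend can be swapped in underneath. All names here are hypothetical and not the gpu module's actual API; a mock backend stands in for a real one.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical backend interface. */
typedef struct GPUBackend {
    const char *name;
    int  (*buffer_create)(size_t size);
    void (*buffer_update)(int buf, size_t offset, const void *data, size_t size);
    void (*draw_triangles)(int buf, int first_vertex, int vertex_count);
} GPUBackend;

static const GPUBackend *gpu_active = NULL;

static void gpu_set_backend(const GPUBackend *backend) { gpu_active = backend; }

/* Thin wrappers: the only entry points the rest of the code may use. */
static int gpu_buffer_create(size_t size)
{
    assert(gpu_active != NULL);
    return gpu_active->buffer_create(size);
}

static void gpu_buffer_update(int buf, size_t offset, const void *data, size_t size)
{
    assert(gpu_active != NULL);
    gpu_active->buffer_update(buf, offset, data, size);
}

static void gpu_draw_triangles(int buf, int first_vertex, int vertex_count)
{
    assert(gpu_active != NULL);
    gpu_active->draw_triangles(buf, first_vertex, vertex_count);
}

/* Mock backend that only counts work. A GL backend would fill the same
 * slots using glGenBuffers/glBufferData, glBufferSubData and glDrawArrays. */
static int mock_buffers, mock_uploaded_bytes, mock_triangles;

static int mock_buffer_create(size_t size) { (void)size; return ++mock_buffers; }

static void mock_buffer_update(int buf, size_t offset, const void *data, size_t size)
{
    (void)buf; (void)offset; (void)data;
    mock_uploaded_bytes += (int)size;
}

static void mock_draw_triangles(int buf, int first_vertex, int vertex_count)
{
    (void)buf; (void)first_vertex;
    mock_triangles += vertex_count / 3;
}

static const GPUBackend mock_backend = {
    "mock", mock_buffer_create, mock_buffer_update, mock_draw_triangles
};
```

A mock like this is also a cheap way to test drawing code without a GPU context.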

- Conclusion

As can be seen, some of these targets can be accomplished by adjusting the current system, while others are more ambitious and long term. For Gooseberry, our needs are more urgent than the long-term deliverables of the viewport project, so we will probably focus on a few pathological drawing cases and a basic framework for compositing (which cannot really be complete until we have a full shader-driven pipeline). However, in collaboration with Jason and Alexandr, we hope to finish and merge the code that will make these improvements possible on a bigger scale.

Blender could easily be expanded into a VJ tool, if only some basic options like UI MIDI mapping and live microphone input in the viewport were available. Personally, I don’t think it’s that hard to implement: it could use a basic EQ to push values to a driver. I tried implementing this myself, but the problem was that my code initialized the dependencies for input access on every frame. I had a piece of code that was added to the driver namespace. Please consider this. I believe it is a minor addition, but it opens immense possibilities and creates a vibrant sub-community.
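
The two ingredients of this suggestion, opening the input once instead of every frame and reducing the signal to one value a driver can read, can be sketched as follows. It is written in C for consistency with the other sketches; in Blender the same pattern would more likely live in a Python function registered in `bpy.app.driver_namespace`. `AudioInput`, the functions and the filter choice are hypothetical, and real device access is stubbed out.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical audio input state; "opening" the device is just a counter. */
typedef struct AudioInput {
    int open_count;   /* how many times the device was opened */
    float lp_state;   /* low-pass filter memory, kept between frames */
} AudioInput;

/* Returns the shared input, initializing it on first use only, instead of
 * re-creating the input dependencies on every frame. */
static AudioInput *audio_input_get(void)
{
    static AudioInput input;
    static int initialized = 0;
    if (!initialized) {
        input.open_count++;  /* stand-in for opening the device */
        input.lp_state = 0.0f;
        initialized = 1;
    }
    return &input;
}

/* Crude one-band "EQ": a one-pole low-pass filter (0 < alpha <= 1, smaller
 * means a lower cutoff) followed by the mean absolute level of the block.
 * The returned value is what a driver would read each frame. */
static float audio_low_band_level(AudioInput *in, const float *samples,
                                  size_t n, float alpha)
{
    assert(alpha > 0.0f && alpha <= 1.0f);
    float sum = 0.0f;
    for (size_t i = 0; i < n; i++) {
        in->lp_state += alpha * (samples[i] - in->lp_state);
        sum += in->lp_state >= 0.0f ? in->lp_state : -in->lp_state;
    }
    return n > 0 ? sum / (float)n : 0.0f;
}
```

A steady signal passes through at full level, while a rapidly alternating one is strongly attenuated, which is the behaviour a "bass" meter driving an animation needs.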

Keep @ it !

This is a very important piece of the puzzle.

What are the “wiggly-widgets”? Do they have anything to do with the Viewport project?

Can’t wait for these features.

Will this affect the BGE?

Faster draw call batching?

DOF is unplugged for now; there’s no sense in trying to make it work yet. The branch will be on hiatus for a while until more important work is done.

Built the latest on win7 @ Hash: c4b6d60

Great work to all of you guys :)

I noticed that when I check the depth of field box, it turns on SSAO :p

Haven’t been able to get the depth of field working yet…

Will keep plugging away at it.

Again great work :)

Cheers,

~Tung

It would be interesting if Blender, through the Gooseberry Project, added the ability to use widget rigs like those in Marionette and Presto (Pixar). That would allow cleaner rigs for the animators.

OK, someone tipped you didn’t they? Admit it!

Have you ever thought about offering, along with Cycles, a more complete option for rendering with OpenGL?

Something like a full render in OpenGL / DirectX?

I know that Blender currently has the option to render with OpenGL, but it’s so limited …

I’ve seen some people using UE4 and Unity to “render” animations.

I don’t know if I explained it well, but would it be possible?

Some examples: youtube.com/watch?v=Quc3Qq2rWCA / youtube.com/watch?v=89V-Rn2-5z4 / UE4 youtube.com/watch?v=ypKaMBWeQj0

The BEER Project may use OpenGL as its core rendering code. Details will be presented at BConf 2014.

Yes we have! Some parts of blender already do, such as the compositor scene strips. Of course for that we really need the extra quality that full shaders can offer.

I hate when a real-time game looks better than my hour-plus render.

I’m not sure if I am barking up the right tree or not, but I do know that some people on YouTube use Valve’s game engine to create videos for their YouTube blogs: they use screen capture software to record the video, do some extra video editing, and they have their episode. Also, in Unity 3D you don’t just have to create games; you can also create real-time movies. It is a little bit of extra work, but it is much like creating an animation in, say, Blender, except that you are creating it in Unity 3D, a game engine, and the only difference is that most of the interaction has to be programmed.

It’s hardly on topic but…

From what I understand about OpenGL (knowledge just after magic horns) and after reading this very informative article, shader-based OpenGL is also a huge step forward in this direction.

But, ehm, it’s 2014: there are a few versions of OpenGL, and the most recent version is 4.5. See http://www.pcper.com/news/General-Tech/Khronos-Announces-Next-OpenGL-Releases-OpenGL-45

You write that you have to make a decision for the long term; I suppose you would then go with the most current technology and not with something from a few years back? Be aware, though, that there are other open alternatives resembling OpenGL these days, too. In fact, it’s a hot topic right now thanks to an NVIDIA and AMD vs. Microsoft battle: OpenGL (and its variations) are primed as alternatives to DirectX, as Microsoft’s sales are declining.

We already have code using OpenGL 3.0 + extensions in Blender. A move to a “newer” OpenGL serves little apart from marketing. What we really need right now is results: better performance, compatibility, a wide audience. If code is performant, users should not really care what we use underneath. It may well be pink unicorns drawing pixels in the framebuffer with their magic horns.

Indeed, that is true. Blender could use every version of OpenGL, every version of DirectX and all of the others combined, but at the end of the day, if those implementations aren’t improving Blender’s performance noticeably for the end user, then what is the point?

Well, as with all software, later versions tend to have more bug fixes and better, faster code. I don’t see many people using the first versions of Blender either… it’s not only marketing. And the open source versions don’t care much for marketing either; they just improve their code.

Hi guys, is there a chance to see an NVIDIA VXGI implementation in the Viewport project? Thanks, Jozef

I think users will appreciate the effort that goes into a full-featured implementation more than just some quick patch fixes.

Sounds nice. After Gooseberry is finished, it would be OK if the whole release that comes after it were dedicated to the Viewport only. Many users would be happy about it, but of course you have the last word.
