Article by Kévin Dietrich

Facebook (now Meta) joined the Blender Development Fund in 2020, with the main purpose of supporting the development of Cycles. A team from Facebook Reality Labs led by Feng Xie and Christophe Hery is interested in using Cycles as a renderer for some of their projects. They chose Cycles because it is a full-featured production renderer; however, they are also interested in improving Cycles’ real-time rendering capabilities and its features for high-quality digital human rendering.

There are three main areas of collaboration:

- Scene update optimization: reduce the synchronization cost between the scene data and its on-device copies, to enable real-time persistent animation rendering.

- Add a native procedural API and an Alembic procedural to accelerate the loading and real-time playback of baked geometry animations.

- Better BSDF models for skin and eye rendering. In particular, we want to support the anisotropic BSSRDF model, which is important for skin rendering, and to accelerate the convergence of caustic effects, which is important for eye rendering (planned).

A short paper about the cloud-based real-time path tracing renderer that Feng’s team built on top of Blender-Cycles will be presented at SIGGRAPH Asia 2021.

This article will discuss the work done so far.

Scene Update Optimization

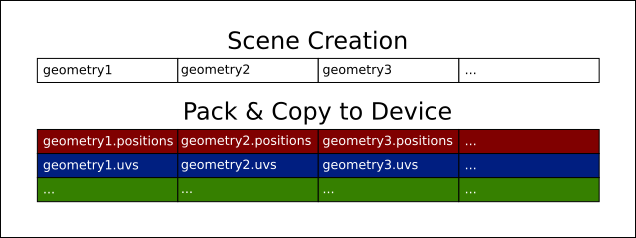

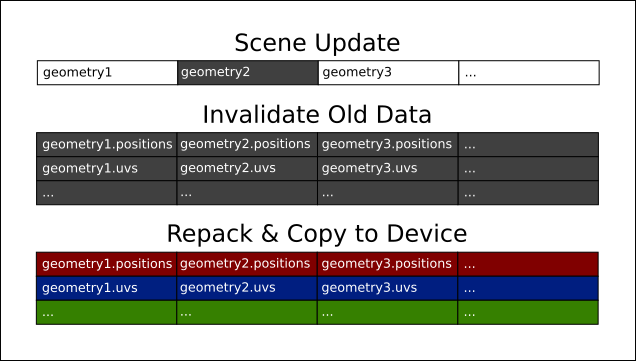

To support as many platforms as possible, Cycles stores the data of the whole scene in unified, type-specific buffers. For example, the UVs of all objects are stored together in a single contiguous buffer in memory, and the same goes for triangles and shader graphs. A major problem with this approach is that whenever anything changes, the buffers are destroyed and recreated, which means copying all of the data again: first into the buffers, then to the devices. These copies and data transfers can be extremely time-consuming.
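To make that cost concrete, here is a minimal Python sketch of the packed layout, assuming a toy scene in which each mesh only carries a flat list of vertex positions. `Mesh`, `pack_positions`, and `naive_sync` are hypothetical names for illustration, not Cycles’ actual C++ API. Because every sync rebuilds the packed buffer from scratch, the number of floats copied equals the size of the whole scene, however little actually changed.

```python
from dataclasses import dataclass


@dataclass
class Mesh:
    """Toy mesh: vertex positions stored as a flat [x0, y0, z0, x1, ...] list."""
    name: str
    positions: list


def pack_positions(meshes):
    """Concatenate every mesh's positions into one contiguous buffer.

    Returns the buffer and a dict mapping each mesh name to the index where
    its slice starts, so that kernels can locate each object's data.
    """
    buffer = []
    offsets = {}
    for mesh in meshes:
        offsets[mesh.name] = len(buffer)
        buffer.extend(mesh.positions)
    return buffer, offsets


def naive_sync(meshes):
    """Rebuild-everything update: any change repacks (and would re-upload) all data."""
    buffer, offsets = pack_positions(meshes)
    floats_copied = len(buffer)  # every float of every mesh is copied again
    return buffer, offsets, floats_copied


# Changing a single vertex of a single mesh still copies the whole scene.
a = Mesh("a", [0.0] * 6)
b = Mesh("b", [1.0] * 9)
naive_sync([a, b])
b.positions[0] = 5.0
_, _, copied = naive_sync([a, b])
print(copied)  # 15
```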

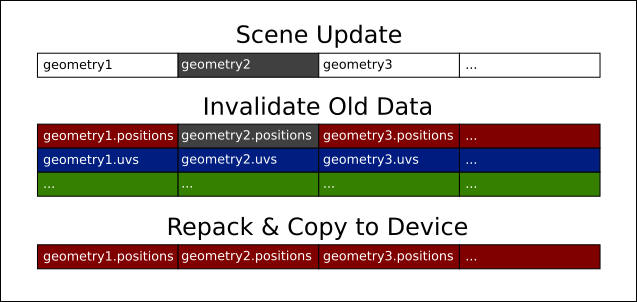

The solution, proposed by Feng, was to preserve the buffers as long as the number of objects and their topologies remain unchanged, and to copy into them only the data of the objects that have changed. For example, if only the vertex positions of a mesh have changed, only those positions are copied to the global buffer; previously, all of its data (UVs, attributes, etc.) had to be copied as well. Likewise, if only a single mesh in the scene changes, only that mesh’s data is copied, and no data from any other object is touched.
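A minimal Python sketch of this partial update, assuming a toy scene where each mesh has only positions and UVs, plus hypothetical per-array `*_modified` flags. The buffers are patched in place only when an array keeps its size (a stand-in for “topology unchanged”); a size change raises an error here, where a real implementation would fall back to a full rebuild. `Mesh` and `PackedScene` are illustrative names, not Cycles’ API.

```python
from dataclasses import dataclass


@dataclass
class Mesh:
    name: str
    positions: list                  # flat xyz floats
    uvs: list                        # flat uv floats
    positions_modified: bool = False
    uvs_modified: bool = False


class PackedScene:
    """Global position and UV buffers that persist across updates."""

    ATTRS = ("positions", "uvs")

    def __init__(self, meshes):
        self.meshes = meshes
        self.buffers = {attr: [] for attr in self.ATTRS}
        # offsets[attr][mesh name] = (start index, length) of that mesh's slice
        self.offsets = {attr: {} for attr in self.ATTRS}
        for mesh in meshes:
            for attr in self.ATTRS:
                data = getattr(mesh, attr)
                self.offsets[attr][mesh.name] = (len(self.buffers[attr]), len(data))
                self.buffers[attr].extend(data)

    def update(self):
        """Copy only the modified arrays of modified meshes; return floats copied."""
        copied = 0
        for mesh in self.meshes:
            for attr in self.ATTRS:
                flag = attr + "_modified"
                if not getattr(mesh, flag):
                    continue  # unchanged data is never touched
                data = getattr(mesh, attr)
                start, length = self.offsets[attr][mesh.name]
                if len(data) != length:
                    raise ValueError("topology changed; full rebuild needed")
                self.buffers[attr][start:start + length] = data  # in-place patch
                copied += length
                setattr(mesh, flag, False)
        return copied


a = Mesh("a", [0.0] * 6, [0.0] * 4)
b = Mesh("b", [1.0] * 9, [1.0] * 6)
scene = PackedScene([a, b])
b.positions = [2.0] * 9
b.positions_modified = True
print(scene.update())  # 9: only b's positions, no UVs, nothing from a
```

The design choice is to trade a little bookkeeping (offsets and flags) for copies proportional to what changed rather than to the size of the scene.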

Change detection is done through a new API for the nodes that make up the Cycles scene (not to be confused with shading nodes). Whereas client applications used to access the nodes’ sockets directly, the sockets are now encapsulated and carry flags indicating whether their value has been modified. A flag is set when a new value is assigned to a socket and that value differs from the previous one. As a result, Cycles can now automatically detect, for each socket, what has changed when synchronizing data with Blender or any other application. Having this information per socket is crucial to avoid unnecessary work, and it provides finer granularity than Cycles previously had.
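A minimal Python sketch of per-socket change detection, assuming a generic node that stores its sockets in a dictionary. The names (`Node`, `set`, `get`, `is_modified`, `clear_modified`) are illustrative only; Cycles’ real C++ node API differs. The key point is that a socket is flagged only when the new value differs from the old one, so a host application re-sending unchanged data causes no downstream work.

```python
class Node:
    """Toy scene node: sockets are only reachable through set()/get()."""

    def __init__(self, **sockets):
        self._values = dict(sockets)
        self._modified = set()

    def set(self, socket, value):
        if socket not in self._values:
            raise KeyError(f"unknown socket: {socket}")
        # Flag the socket only when the value actually changes.
        if self._values[socket] != value:
            self._values[socket] = value
            self._modified.add(socket)

    def get(self, socket):
        return self._values[socket]

    def is_modified(self, socket=None):
        """True if the given socket (or, with no argument, any socket) changed."""
        if socket is None:
            return bool(self._modified)
        return socket in self._modified

    def clear_modified(self):
        """Called once the change has been synchronized to the device."""
        self._modified.clear()


light = Node(strength=1.0, size=0.5)
light.set("strength", 1.0)       # same value: nothing flagged
print(light.is_modified())       # False
light.set("strength", 10.0)
print(light.is_modified("strength"), light.is_modified("size"))  # True False
```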

Another optimization was the addition of BVH refitting for OptiX: the BVHs of objects are kept in memory and only rebuilt if an object’s topology changes. Otherwise, the existing BVH is refitted, i.e. its bounding boxes are updated to enclose the new vertex positions while the tree structure is kept.
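Refitting keeps the tree and only recomputes bounding boxes bottom-up from the new vertex positions, which is much cheaper than a full rebuild. This Python sketch assumes a toy binary BVH whose leaves hold triangle indices and whose internal nodes always have two children; `BVHNode` and `refit` are hypothetical names. On the GPU, OptiX exposes the same idea as an acceleration-structure update (`OPTIX_BUILD_OPERATION_UPDATE`, which requires the structure to have been built with `OPTIX_BUILD_FLAG_ALLOW_UPDATE`).

```python
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]


@dataclass
class BVHNode:
    lo: Vec3 = (0.0, 0.0, 0.0)        # AABB min corner
    hi: Vec3 = (0.0, 0.0, 0.0)        # AABB max corner
    left: Optional["BVHNode"] = None
    right: Optional["BVHNode"] = None
    triangles: tuple[int, ...] = ()   # leaves only: indices into the triangle list


def refit(node, triangles, positions):
    """Recompute bounding boxes bottom-up; the tree topology is left untouched."""
    if node.left is None and node.right is None:
        # Leaf: bound every vertex of every triangle it references.
        pts = [positions[v] for t in node.triangles for v in triangles[t]]
    else:
        # Internal node: refit both children first, then bound their boxes.
        refit(node.left, triangles, positions)
        refit(node.right, triangles, positions)
        pts = [node.left.lo, node.left.hi, node.right.lo, node.right.hi]
    node.lo = tuple(min(p[i] for p in pts) for i in range(3))
    node.hi = tuple(max(p[i] for p in pts) for i in range(3))


tris = [(0, 1, 2), (3, 4, 5)]
pos = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (2, 2, 2), (3, 2, 2), (2, 3, 2)]
root = BVHNode(left=BVHNode(triangles=(0,)), right=BVHNode(triangles=(1,)))
refit(root, tris, pos)
print(root.lo, root.hi)  # (0, 0, 0) (3, 3, 2)
pos[4] = (5, 2, 2)       # deform: move one vertex, topology unchanged
refit(root, tris, pos)
print(root.hi)           # (5, 3, 2)
```

Because the tree layout is frozen, a refitted BVH can become slower to traverse when vertices move far from where the tree was originally built; that is the trade-off accepted in exchange for skipping the rebuild.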

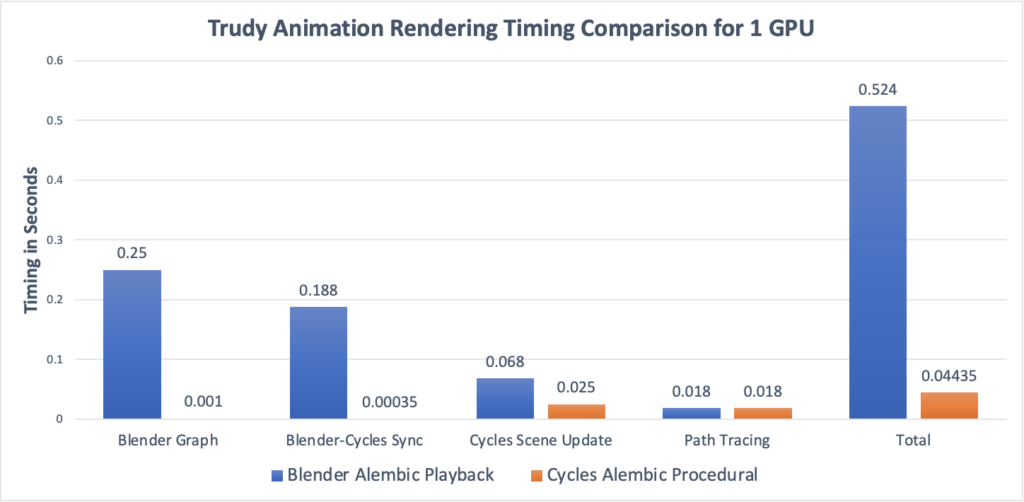

All in all, these changes reduced Cycles’ memory consumption and cut synchronization time in half on average. Benchmark results using FRL’s Trudy 1.0 character are shared below.

Procedural System

Another new feature was the addition of a procedural system. Procedurals are nodes that can generate other nodes in the scene just before rendering. Such a mechanism is common in other production-ready render engines.
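A minimal Python sketch of such a hook, assuming a scene that runs each registered procedural right before rendering a frame. `Scene`, `Object`, `Procedural`, `ToyProcedural`, and `prepare_render` are hypothetical names, not Cycles’ API. The toy procedural creates its objects on the first call and only updates them on later frames, so the scene keeps a stable set of nodes whose changes can be detected per socket.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


@dataclass
class Object:
    name: str
    frame: float = 0.0  # stands in for per-frame geometry


@dataclass
class Scene:
    objects: dict = field(default_factory=dict)
    procedurals: list = field(default_factory=list)


class Procedural(ABC):
    @abstractmethod
    def generate(self, scene: Scene, frame: float) -> None:
        """Create or update the scene nodes owned by this procedural."""


class ToyProcedural(Procedural):
    """Emits one object per name, e.g. one per shape found in an archive."""

    def __init__(self, names):
        self.names = names

    def generate(self, scene, frame):
        for name in self.names:
            obj = scene.objects.get(name)
            if obj is None:  # first frame: create the node
                obj = scene.objects[name] = Object(name)
            obj.frame = frame  # later frames: update it in place


def prepare_render(scene, frame):
    """Run every procedural just before rendering the given frame."""
    for procedural in scene.procedurals:
        procedural.generate(scene, frame)


scene = Scene()
scene.procedurals.append(ToyProcedural(["body", "hair"]))
prepare_render(scene, 1.0)
prepare_render(scene, 2.0)
print(sorted(scene.objects), scene.objects["body"].frame)  # ['body', 'hair'] 2.0
```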

Alembic was chosen for the concrete implementation of the first Cycles procedural. Alembic is widely used by 3D content creation systems to exchange baked geometry animations (including skin and hair deformations). In the future, we may explore developing a native USD procedural.

The Alembic procedural loads Alembic archives natively, bypassing Blender and its RNA, which is slow to traverse and convert. The procedural is accessible from Blender, and when it is active, Blender does not load the data from the Alembic archive itself, saving precious time. The procedural also has its own memory cache, which preloads data from disk to limit costly I/O operations. With this procedural, data can be loaded much faster than through Blender, up to an order of magnitude faster.
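The memory cache can be pictured as a bounded frame cache sitting in front of the archive. The article does not describe the actual cache policy, so this Python sketch assumes least-recently-used eviction under a byte budget, with a hypothetical `load_frame` callable standing in for reading one frame from an Alembic archive; `FrameCache` is likewise an illustrative name.

```python
from collections import OrderedDict


class FrameCache:
    """LRU cache of per-frame geometry blobs, bounded by a byte budget."""

    def __init__(self, load_frame, budget_bytes):
        self.load_frame = load_frame  # frame -> bytes; stands in for archive I/O
        self.budget = budget_bytes
        self.used = 0
        self.entries = OrderedDict()  # frame -> bytes, least recently used first
        self.disk_reads = 0

    def get(self, frame):
        if frame in self.entries:
            self.entries.move_to_end(frame)  # mark as most recently used
            return self.entries[frame]
        data = self.load_frame(frame)
        self.disk_reads += 1
        self.entries[frame] = data
        self.used += len(data)
        # Evict least recently used frames until back under budget,
        # always keeping the frame that was just loaded.
        while self.used > self.budget and len(self.entries) > 1:
            _, evicted = self.entries.popitem(last=False)
            self.used -= len(evicted)
        return data

    def preload(self, frames):
        """Read frames ahead of playback so rendering hits memory, not disk."""
        for frame in frames:
            self.get(frame)


cache = FrameCache(lambda frame: bytes(10), budget_bytes=30)
cache.preload(range(3))          # 3 reads from "disk"
for frame in range(3):
    cache.get(frame)             # playback: served from memory
print(cache.disk_reads)          # 3
```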

Alembic Procedural Performance

Let’s take a look at a case study using Reality Labs’ digital character Trudy, which consists of 54 meshes with 340K triangles. Rendering Trudy in Blender 3.0 with Cycles’ native Alembic procedural increases the frame rate by 12x, from 2 to 24 frames per second. A detailed breakdown of the speed-up for each computation stage is shown in the table below. The most notable gain from the Alembic procedural comes from almost completely eliminating the Blender graph evaluation and Blender-Cycles evaluation time, a speed-up of around 300x for those stages. (Courtesy of Feng Xie, Reality Labs Research)
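Expressed as time per frame, the 12x figure corresponds to the following per-frame budgets, i.e. everything needed to produce a frame has to fit in roughly 42 ms instead of 500 ms:

```latex
t_{\text{before}} = \frac{1}{2\,\text{fps}} = 500\,\text{ms},\qquad
t_{\text{after}} = \frac{1}{24\,\text{fps}} \approx 41.7\,\text{ms},\qquad
\frac{t_{\text{before}}}{t_{\text{after}}} = \frac{24}{2} = 12
```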

Statistics: 54 objects, 340K tris, 770MB textures

Better BSDFs

The team at Facebook is also interested in improving the physical correctness of Cycles’ BSDFs. For example, a recent contribution improved the rendering of subsurface scattering by adding an anisotropic phase function, which better simulates the interaction between light and skin (tip: human skin has an anisotropy of about 0.8). This contribution was made by Christophe Hery, co-author of the original Pixar publication that introduced this improvement to the rendering community.
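The anisotropy parameter is the mean cosine g of the scattering angle: g = 0 scatters uniformly in all directions, while g close to 1 scatters mostly forward. Cycles’ volume shaders use the Henyey-Greenstein phase function for their anisotropy parameter; the article does not name the function used for skin, so this Python sketch simply assumes Henyey-Greenstein and shows how to evaluate it and how to sample cos θ by inverting its CDF. The function names are hypothetical.

```python
import math


def hg_phase(cos_theta: float, g: float) -> float:
    """Henyey-Greenstein phase function p(cos theta) for anisotropy g in (-1, 1).

    Normalized over the sphere: the integral of p over all directions is 1.
    """
    denom = 1.0 + g * g - 2.0 * g * cos_theta
    return (1.0 - g * g) / (4.0 * math.pi * denom ** 1.5)


def hg_sample_cos_theta(xi: float, g: float) -> float:
    """Map a uniform random number xi in [0, 1) to cos theta distributed as HG."""
    if abs(g) < 1e-3:
        return 2.0 * xi - 1.0  # isotropic limit: cos theta uniform in [-1, 1]
    s = (1.0 - g * g) / (1.0 - g + 2.0 * g * xi)
    return (1.0 + g * g - s * s) / (2.0 * g)


g = 0.8  # the skin anisotropy mentioned in the article
# Forward vs. backward scattering strength: ((1 + g) / (1 - g))**3
print(round(hg_phase(1.0, g) / hg_phase(-1.0, g)))  # 729
```

With g = 0.8, light is several hundred times more likely to continue forward than to bounce straight back, which is why an isotropic model tends to misrepresent how light travels through skin.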

Future Collaborations

While this collaboration was previously focused on synchronization speed, it now focuses on physical correctness and path tracing speed. A patch for manifold next event estimation (MNEE), which will improve the rendering of caustics, will soon be submitted for review and inclusion. Several further optimizations are also in the works to improve the performance of Cycles’ data transfers and its memory usage (especially VRAM).

I know this is a research project, but is there a project board I can follow for updates? Is there a time frame for implementation? Is it already included in the “Alembic” workboard?

The Render & Cycles workboard is the one to follow. But to be clear, this research project at Reality Labs is not something we track as part of Blender development planning. Rather it leads to various individual contributions to Cycles (anisotropic SSS, MNEE, Alembic procedural, …) that we then track like any other feature or contribution.

Is there any intention to improve Cycles’ scalability (to import very large photogrammetry scans with hundreds of 8K textures, for example) using texture streaming or mipmapping?

There is a task for that, but it’s unrelated to this project.

https://developer.blender.org/T68917

Are there going to be any Grease Pencil improvements in the future: shadows? Tracing colored images and turning them into colored vectors?

This is unrelated to Cycles; see this page for where to ask such questions:

https://wiki.blender.org/wiki/Communication/Contact#User_Feedback_and_Requests

Will the renderer be cloud-based only or local? Paid or free?

This is a research project, there’s no new renderer being announced.

Hi. This looks amazing. :) Cycles has a bright future ahead.

However, I would like to know more about Cycles’ real-time capabilities in the context of EEVEE. Isn’t this some kind of redundancy?

Cycles will always be more correct. For example, refraction in EEVEE is greatly simplified; it works on simple objects but not on complex ones. You can read this article comparing Cycles and EEVEE (the images are from 2.80, but the text has been updated for 3.0):

https://cgcookie.com/articles/blender-cycles-vs-eevee-15-limitations-of-real-time-rendering

And high memory consumption is a problem, especially in complex scenes.

Additionally, making a cloud-based real-time renderer is pretty straightforward for a path tracer; see the paper linked in the article. For EEVEE, it is not clear whether such a relatively simple approach would work.

Wow
